AI for everyday things

This is the summary of the talk by Gaurav Nemade presented at Git Commit Show 2019.

About the Speaker

Git Commit Show welcomes our next speaker, Gaurav Nemade. He is a product manager at Google AI. Artificial intelligence is an approach to training machines to think like the human brain. It is an effort to make machines work smartly and efficiently. To know more about it and how it is affecting our lives on a vast scale, hear from the best. Our topic for today’s discussion is “AI for everyday things”.

Summary of the talk

A huge warm welcome to Gaurav. He will take us on a journey through how AI shows up in our day-to-day lives.

Our agenda revolves around these topics:

- What is AI?

- How AI is being used for everyday things

- What is the future like with AI?

Gaurav:

I am going to give many examples of how AI is changing our day-to-day activities and how it is changing the world today. I hope to provide some inspiration to solve problems using AI.

A lot of people are super confused about what AI is. They see movies and get scared that AI is a bad thing, and some people don't know whether it is a technology or a piece of software. So what exactly is AI? Let me take a step back and explain in layman's terms.

AI is basically a very simple science, a part of computer science, that aims at making artificial things more intelligent. It's not new in our world; we've been interacting with artificially intelligent things for decades. Just to give you an example, when you set the temperature on your air conditioner using your remote, that's sort of an artificially intelligent machine, because it takes decisions on its own. You say, “Hey, I want the temperature in the room to be twenty-one degrees Celsius”, so it keeps cooling the room until the temperature reaches 21, and at that point it makes a decision and stops. So it's a smarter machine than one where you just turn an air conditioner on. It's not super intelligent, but it's slightly intelligent.

Similarly, you may have played chess before. I used to play chess a lot. There was a computer mode, and the way it operated was like a decision tree: if the pieces on the chessboard are in such-and-such position, the computer should play such-and-such next move. So it was very decision-tree based, and somebody had manually coded the rules, but nevertheless the aim was to make that system intelligent. You might wonder why AI is such a big deal at this point in time.
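The air-conditioner rule described above can be written down in a few lines. This is only a minimal sketch: the function names and the one-degree cooling step are assumptions made for illustration, not how any real air conditioner is programmed.

```python
def thermostat_action(current_temp_c: float, target_temp_c: float) -> str:
    """Hand-coded rule: keep cooling while the room is warmer than the target."""
    return "cool" if current_temp_c > target_temp_c else "off"


def simulate_cooling(start_temp_c: float, target_temp_c: float, step_c: float = 1.0) -> list:
    """Cool the room in fixed steps (an assumed 1 degree per step) until the rule says stop."""
    temps = [start_temp_c]
    while thermostat_action(temps[-1], target_temp_c) == "cool":
        # Never overshoot below the target temperature.
        temps.append(max(temps[-1] - step_c, target_temp_c))
    return temps


print(simulate_cooling(25.0, 21.0))  # [25.0, 24.0, 23.0, 22.0, 21.0]
```

The point is that a person wrote the rule; the machine only applies it.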

Let me explain with this example of temperature setting. Think about how you set the temperature at your house. Let's say you get up at 7:00 a.m. and change the temperature from 22 degrees Celsius to 25 degrees Celsius. Then from 9:00 to 5:00 you turn it off because you are at work. In the evening, when you watch television, you like it at 24 degrees, and finally, when you go to sleep, you like it slightly cooler and keep it at 22 degrees Celsius. The way you can do this right now is either to use the remote and set the relevant temperature every single time, or to use one of the rule-based thermostats that let you program a daily schedule: 7:00 a.m. this, 9:00 a.m. this, and so on.

But what if we had a system that could analyze what you do on a daily basis and automatically learn your daily timetable of using the AC? Suppose it learns what temperature to keep at what point of time. Essentially, you are going from setting manual rules about what the temperature of the room should be to an automated decision and action system that understands how you like your temperature throughout the day and takes automated actions, like changing the temperature during the day. There is a product called the Nest thermostat which does exactly that. It tries to understand what your patterns are during, say, the first five days after you start using it, and from the sixth day it starts adapting itself to those patterns. The number of days is just an example. It basically tries to understand your patterns from previous days and sets the temperature accordingly over the next few days. This is the power of AI.
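To make the contrast with hand-written rules concrete, here is a toy sketch of learning a schedule from a few days of observed settings. It simply averages what the user chose at each hour; this is an illustrative assumption and not how the Nest thermostat actually works. The evening hours (18 and 22) and the small day-to-day variations are made up for the example.

```python
from collections import defaultdict
from statistics import mean

OFF = None  # hypothetical marker meaning "the AC was switched off"


def learn_schedule(observations):
    """Learn {hour: setting} from (hour, setting) pairs collected over several days."""
    by_hour = defaultdict(list)
    for hour, setting in observations:
        by_hour[hour].append(setting)

    schedule = {}
    for hour, settings in sorted(by_hour.items()):
        temps = [s for s in settings if s is not OFF]
        if len(temps) * 2 < len(settings):
            # The AC was off more often than on at this hour: learn "off".
            schedule[hour] = OFF
        else:
            # Otherwise learn the average temperature the user picked.
            schedule[hour] = round(mean(temps), 1)
    return schedule


# Five days of made-up observations that vary slightly from day to day.
five_days = []
for day_offset in (-0.5, 0.0, 0.5, 0.0, 0.0):
    five_days += [(7, 25 + day_offset), (9, OFF), (18, 24 + day_offset), (22, 22 + day_offset)]

schedule = learn_schedule(five_days)
print(schedule)  # {7: 25.0, 9: None, 18: 24.0, 22: 22.0}
```

Nobody typed the schedule in; it was derived from the user's behaviour, which is the shift from manual rules to learned ones.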

Coming back to the question of why it is such a big deal now: AI has become a big deal in the last four to five years primarily because of a technique called machine learning. It is basically the same thing that I just talked about. Through machine learning, we try to build a model that can analyze all the data from the past and, based on that, predict what the next instance should be. Let's take an example to make it clearer. Say I give you some data about the kind of movies I like, for example Avengers, Spider-Man, Titanic, Shutter Island, and Iron Man.

Among these, let's say I like Avengers and Spider-Man but I don't like Titanic and Shutter Island.

If I were to ask you, based on this data, whether I would like Superman more or Fight Club more, I think for a human it's not very difficult to predict. Since I like Avengers and Spider-Man, and both are superhero movies, I would probably like Superman as well, and since I don't like Titanic and Shutter Island, I would probably not like Fight Club as much. Machine learning essentially tries to do the same thing. It takes a lot of data and, based on that, tries to predict which of the given options I might like or dislike.
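The movie example can be sketched as a tiny "learn from likes and dislikes" program. The genre tags below are hypothetical labels added purely for illustration, and the scoring rule (add up per-genre likes and dislikes) is a deliberately simple stand-in for a real machine learning model.

```python
from collections import Counter

# Hypothetical genre tags, assumed for this example only.
GENRES = {
    "Avengers": {"superhero", "action"},
    "Spider-Man": {"superhero", "action"},
    "Titanic": {"romance", "drama"},
    "Shutter Island": {"thriller", "drama"},
    "Superman": {"superhero", "action"},
    "Fight Club": {"thriller", "drama"},
}

# Training data from the talk: +1 means liked, -1 means disliked.
ratings = {"Avengers": 1, "Spider-Man": 1, "Titanic": -1, "Shutter Island": -1}


def learn_genre_weights(ratings):
    """Add up likes and dislikes per genre across the rated movies."""
    weights = Counter()
    for movie, label in ratings.items():
        for genre in GENRES[movie]:
            weights[genre] += label
    return weights


def score(movie, weights):
    """Higher score means the model expects the user to like the movie more."""
    return sum(weights[genre] for genre in GENRES[movie])


weights = learn_genre_weights(ratings)
print(score("Superman", weights), score("Fight Club", weights))  # 4 -3
```

With these assumed tags, Superman scores higher than Fight Club, matching the human intuition described above.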

Now the question is why has it become such a big deal in the last few years?

There are two major reasons for that:

1. Machine learning algorithms are, at the end of the day, complicated mathematical equations, and to solve these equations we need a huge amount of compute power, measured in FLOPS (floating-point operations per second). We did not have huge amounts of computing power until around 2010, when the GPU industry really started picking up, and we should probably thank the gaming industry for that. Once the GPU industry picked up, we got the computation we needed and started training more complicated machine learning models, which can give super accurate results.

2. The next thing that played an important role was the explosion of data. You may have heard the term big data a lot in the last few years. Big data essentially means a lot of data being collected through online systems.

A lot of data, a lot of compute power, the machine learning algorithms and the mathematical equations: these came together really well in the last five to six years, and that is why we have seen so many advances in machine learning and AI in the last six to seven years. A lot of people also get confused about what ML is, what AI is, and how they are related to or different from each other.

In layman's terms, ML is a way to power AI: it is a technique that we use to create artificial intelligence. Within machine learning, there is a very specific domain called deep learning, which is loosely modeled on how our brain works.

Deep learning is one of the techniques within machine learning that we use to generate artificial intelligence. All right, real quick:

What are the subfields within AI that are hot right now?

The first one is computer vision. Basically, you give it an image or a video, and the model tells you what that image is about. If the image shows a dog, a computer algorithm will predict that this image is of a dog.

The other main area is language understanding. Say I have to predict the sentiment of a review that a person wrote on Google Maps or on Yelp. There are models that can take a message like “I hate that restaurant” and predict that the sentiment is negative. That falls under the category of language understanding.

The third major category is speech recognition. When somebody speaks, the speech gets recorded as waveforms, and from these waveforms we generate text, a transcript of exactly what the user is saying.

The fourth one is something that we call recommendation systems. The example that I gave about movies, whether I would like Superman or Fight Club based on my previous history, falls in the bucket of recommendation systems. You recommend something to users: it can be movies, apps on the Play Store or iOS, videos on YouTube, and so on.

There are several other fields within AI. I'm not going to cover all of them, but I think you get a sense of what the major ones are, and I'm going to talk mostly about examples in these fields to keep things relevant. What exactly we are trying to do is make artificial things more intelligent and enable them to make predictions and decisions using machine learning.

Let's jump into the second part, which is AI for everyday things. Here I'm going to talk primarily about how people are using AI in day-to-day life and how it is transforming things. Let's start with a field that we probably don't spend a lot of time on, even being heavily involved in the technology community, but I really love this example and that's why I want to start with it.

You are probably aware of self-driving cars. There is a company called Waymo, an Alphabet company, which launched the first self-driving taxis in the world, and there is a video you can check out where Waymo cars are being operated. This thing is super cool. It definitely freaks people out, because there is nobody at the steering wheel, and when you see that for the first time you're like, “Holy crap, how will this work?” But these kinds of technologies are advanced. I see a lot of these self-driving cars and companies here in Silicon Valley. The way they build these models is basically a hybrid: advanced computer vision, for which they have a lot of sensors and cameras across the car, plus some speech technology to understand whether somebody is honking or whether there is some sort of unusual noise coming from the car or the road. Based on that, they decide what the car should do in the next instant. This is done through a technique called reinforcement learning, which I'm not going to talk about. You can search for it if you are interested.

You may have seen smart replies in apps or in emails. These are the small chips that you see within a conversation that help you respond easily. They take the context of the previous messages into account and, based on that, predict potential responses that you may want to send. This is at the core of natural language processing and natural language understanding, and it is a super useful feature in apps like LinkedIn, Gmail, Android Messages and so on.

There is a startup in Silicon Valley called YCA--, and they are basically helping businesses take meeting notes. This is one of the things that I really, really don't like doing, but it's very important to keep track of things, know who is assigned which action items, and make progress on the project. What the product does is join your meeting and listen to what is being said. It then uses speech recognition to transcribe whatever is said, and on top of that there's a bunch of natural language understanding to identify, “Hey, this particular task was given to Kora” or “this task was given to Henry”, and then help you navigate the meeting and beyond. It's a very cool technology, and you can check it out if you have a start-up or if you ever want to run a start-up without taking meeting notes. This might be super cool.

The next area that I want to talk about is retail and payments. There are a lot of object detection APIs available these days. What they essentially do is this: you click a picture of an object you are interested in. In this case you're probably interested in the trouser or the shoe, so you can tap on the shoe. Now, let's say there are 100,000 shoes that Amazon sells. After taking the picture of this shoe, the system tries to predict which of these 100,000 shoes it is. Once it identifies, with a certain probability, that this is probably shoe number 999, it shows you the details about that shoe, and if you are interested you can just order it. This is going to be the future of shopping. Next time you see those cool sneakers or cool t-shirts you like, you don't have to go to Target or Reliance and try to find those things. You can just use object detection and computer vision and get those things directly online.

The next one in retail and payments is fraud detection for online payments. This is a huge industry all across the world.

Before I joined Google AI, I used to work on the Google payments system. Essentially, what we did was try to understand the behavior of the user and the user's credit card transaction history and predict whether a transaction was fraud or not. This is one of the very big industries right now, and I don't think it's going to go anywhere for the next decade or so.

All right. The last industry that I have here is healthcare, and AI is becoming a big, big deal in the healthcare industry. One of the major use cases is predicting diseases from high-resolution scans. Here, on the left side, you see a scan of someone's retina. There is a disease called diabetic retinopathy, which is very common across the world.

What it essentially means is that if a person has diabetes, they have a much higher probability of developing issues with the retina, and that can cause blindness. Usually, the way to diagnose it is to take a really high-resolution scan of the retina and show it to ophthalmologists, and based on that they will predict whether you have a high, low, or medium risk of having diabetic retinopathy.

This was done by one of the Google research teams. They took the sample and showed it to three ophthalmologists, and the responses were like this: one said this is a severe case, one said this is a mild case, and one said that there is nothing here. When we ran this image through the ML model, it predicted that it was an advanced case.

They then went back, talked to a lot of ophthalmologists, showed them this image, and did some advanced analysis of the image, and they eventually realized that this was actually an advanced case. It's in cases like this that machines can identify patterns much better than humans can, and hence AI is going to be a huge thing not just in diabetic retinopathy but also in cancer detection and all sorts of vision-related use cases that you can think of.

So let's move on to the last part of the talk. We have seen what is happening in a lot of these industries, but what does the future entail? The first major way in which I think AI will significantly impact our lives is content generation. What I mean by that is voice, text and video generation. Right now, if you want to curate news or if you want to have Shahrukh Khan's voice, you have to type the news yourself or record Shahrukh Khan's voice specifically over a microphone. What AI can do, and the research is almost at the level where this is possible, is generate the voice of a particular person by itself, so you don't really need to record a particular sentence from Shahrukh Khan. You can just generate movie dialogues or news, for example, without even having him say anything.

Similarly, AI can start writing stories, poems, news articles, movie scripts and so on. Generation of text and voice is going to be a big thing. I have a quick example.

Here is a nice piece of technology from Xinhua, which is a news agency in China. They basically launched the first AI news anchor, and it is actually very fascinating to see. I'm just going to play the video.

"Hello everyone, I'm an English artificial intelligence anchor. This is my very first day in Xinhua News Agency. My voice and appearance are modeled on Zhang Zhao, a real anchor with Xinhua. The development of the media industry calls for continuous innovation and deep integration with the international advanced technologies. I will work tirelessly to keep you informed as texts will be typed into my system uninterrupted. I look forward to bringing you the brand new news experiences."

As you can see, the AI was doing multiple things here. First, it was generating speech from the text that was given to it. Second, it was generating the face of the particular anchor in China that it was talking about, and it felt very real. This is still at an early stage, but I'm pretty sure that it will get to a level where it will be hard to differentiate between a real anchor and an AI-generated one.

The second area where AI is going to make a big dent, I think, over the next five to ten years is personal assistants and friends. We have already seen things like Alexa, Google Assistant and Siri, but I think these are going to go to the next level in the next five to ten years. I'm not sure if you followed the Google I/O keynote last year, but this is an example of how Google Assistant was able to actually call a hair salon and book an appointment for a woman's haircut on behalf of a client. On the left side, as you can see, it was a really fascinating conversation, and you don't actually notice at first that it is an AI. This is a really, really hard thing for AI to get right, so we are actually getting to the point where AI can speak very fluently with humans, and potentially we could pass the Turing test in a few years, if not a decade.

The other area, related to voice assistants and virtual assistants, is that people will start having a lot of virtual friends. An example here is an app called Replika. It's basically a well-being app where you can chat with Replika about general things or about your emotions. If you're stressed, you can just come and talk to Replika, and it is actually surprisingly good. I've tried this one and I'm really impressed with Replika. More and more of these apps and technologies will arrive in the next five to ten years, and I wouldn't be surprised if people have multiple virtual friends with different personalities that they can talk to. Obviously, the challenge here is that human-to-human conversation will be significantly reduced, but we probably have to wait and see how that turns out.

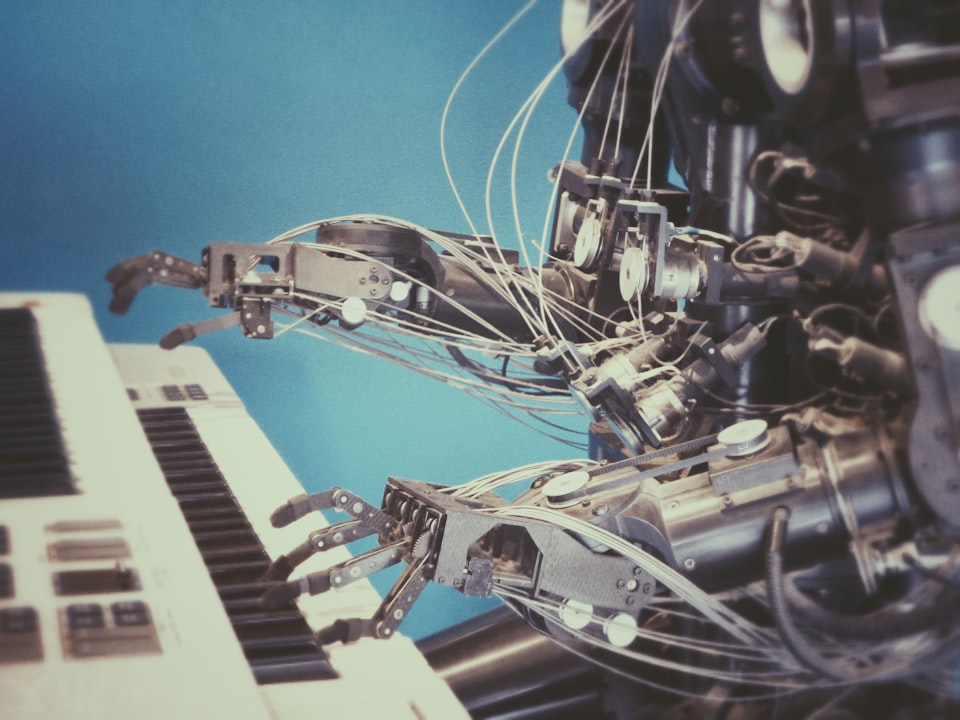

The third area that I think is going to be huge and is going to disrupt the way we live today is robot companions. We already have robots in our houses today. You may have Roombas, which are cleaning robots that go around the house and clean it. You have washing machines, which are in a way robots. They don't move; they just clean clothes in one particular place. But what I mean by robot companions here is much more mobile and much more interactive robots.

One super cool example that I've seen of this is a pet called Aibo. It's basically a robotic dog, and it has made a lot of headlines in the last few years.

I was totally fascinated and surprised and had a bunch of different emotions after I saw this robotic dog. This is, I think, the second or third generation of it. It's actually super cool: if you pat it on the top of the head, it reacts in one way, and if you massage it, it reacts in a different way. This technology will blow up over a period of time, and you will see more pets, more house-cleaning robots and things like that within homes in the next few years. If you want to buy one, this is like $300. I think right now it is very expensive.

I think the other way robots will start helping humans is by working with humans side by side. This is already happening. You may already know about the robotic arms that are installed in, let's say, huge car factories and places like that. But the revolution that is happening right now is that companies are creating development kits which you can take and customize for your needs. If you have a small assembly line, you can customize how your robots interact with human beings, how much interaction you want with them, how much voice capability and how much vision capability they should have, and many other things. So this is getting huge as well, if you want to check out how robotics is doing some of the cool stuff in this area.

The last one, and probably the most interesting one here, is intelligence augmentation. There are a lot of predictions about how AI will affect us by, let's say, 2040, or in twenty to thirty years. My take is that AI is going to get super intelligent, and we will have something called artificial general intelligence, which can do a lot of tasks together.

So it will be sort of human-level intelligence. The issue right now is that ML models, or AI, can do only very specific tasks. You can train an AI or ML model to identify cancer. You can train an AI or ML model to detect objects. You can train an AI or ML model for speech recognition. But these are three very different ML models. To achieve human-level intelligence, we need a single big model which can do a lot of these tasks at once, and that is a very complicated task.

Researchers across the world are working on it, and if we are able to crack it, this will probably happen in the next one to two decades. Now imagine that it happens: we will have an AI that is super intelligent, and at some point it will surpass the intelligence of humans. To keep up with the AI, what we'll essentially have to do is add artificial intelligence to human brains. It can be invasive, like we see in sci-fi movies, where you embed chips and connect them to neurons somehow, which I don't think anybody knows how to do at this point. Or it could be external, where you wear a headset or some sort of cap and it transmits information directly into your nerves. It could be either of those. I think it will probably be both. Based on the predictions from some of the most famous futurists, I think this will probably happen somewhere between 2040 and 2050: we will start having more artificial intelligence in our brains.

Just to give you a sense of how things are going to look in the next 50-200 years, think of the census, the questionnaire that the government asks us to fill in to get a sense of how people are doing in the country. The census of the 1970s asked things like how many people live in big houses. The census of 2100 is going to look something like this. People will not care about houses, rooms or anything like that. People will care about how much artificial intelligence you have in you, so this is one of the questions that will be there. The options could be: "Hey, I don't have any implants, I'm a true human being", "I have linked implants only", "I have intelligence augmentation which is external only", and finally "I have embedded intelligence", which is basically chips inside your brain or somewhere, and you're basically a cyborg.

So I think we are moving in that direction, and we should see significant advances in this field of invasive and non-invasive technology even in the next 20-30 years. We may like it and do it by choice. If we don't like it, I think it will become a necessity, because when everybody else is becoming super intelligent, somebody who is not getting artificial intelligence in their brain will just be left behind. So people will do it either by choice or by necessity, but they will have to do it.

The last bit of wisdom, or the thought that I want to leave you with, is this. I think from 1975 to 1995 the focus of the world and the technology industry in general was to pick a service and make it digital. If you remember the railway system in India, for example, we moved to digital tracking at that point. Not online, but digital: it used huge mainframes, and everything started to be stored on computers. From 1995 to 2013 or 2015 was the era where everybody was trying to pick a service and make it online, whether it was shopping, which Amazon took online, or cab services, which Uber took online. I think for the next 15 to 20 years it's going to be primarily AI, and it has already started.

I think since the 2013-2014 timeframe the theme has been to pick a service and improve it using AI, and I think this is going to be the recipe for the next 10,000 startups in the world. At this point I have probably convinced you that AI is the driving force of our era and that it is changing our everyday things.

So if you are interested in this, or if you found any of this information useful, I would request you to start exploring AI and thinking through problems and how you could use AI and ML to solve them.

That’s it from my side. Thank you!!
