Enabling speed and confidence in software development with testing

This is the written version of the talk given by Sarthak Vats at the Git Commit Show 2020. The talk covers the current challenges teams face (management, technical, strategy/planning, cultural, etc.). It also covers anti-patterns, suggestions from the speaker's experience, and takeaways on mindset, planning, prioritization, technologies, and management. The aim is to guide teams to adopt a culture of speed with quality.

About the Speaker

Sarthak Vats is the VP of Engineering at Noon. He has experience in building engineering systems, processes, and teams in B2B SaaS and B2C startups. He is passionate about system and organizational scale.

What is speed? What is confidence?

Speed is the rate at which something happens or is done. Confidence is the state of feeling certain about the truth of something. When you are confident, you have no fear and you are not nervous.

We want to achieve both speed and confidence, but is it possible?

Let us understand the dynamics in which a software team functions.

In any software development project, be it a startup or be it in any enterprise, three parameters typically influence us:

- Schedule

- Scope

- Quality

The schedule is the timeline or the deadline. The scope is the amount of work that you need to accomplish: the number of stories, the requirements, and so on. The third is the quality of the delivered release; it must be a deliverable product, and it takes effort to achieve that.

We can only lock down two parameters at a time. The third always gives way eventually: out of the three parameters, you can do your best to lock down two, but for the third one, you need to strike a balance against the two you locked down first.

For example, we fix the day we want to release, then define the set of features, and then start working. But in the race to finish that set of features in that timeframe, we focus more on the time and often end up compromising on the quality of the product.

So, what is it about quality? Why is it so hard?

It is the nature of software development that it is an intellectual, creative process. Writing an editorial article or doing a research project, for example, takes time. It needs to go through multiple iterations of improvement before it gets perfect because it's a creative thing. We think about it, we do it, and then we check whether it turned out the way we wanted it to be. With software specifically, unless you see something working, you don't connect with it. Without engagement, there can be no adoption or feedback, and it becomes a theoretical exercise. To make it practical, we have to develop it and release it.

Many times, we get caught up in balancing three parameters: scope, schedule, and quality, and quality suffers as a result.

Why is it important to achieve speed and quality?

Achieving speed and confidence comes down to how we balance our pace of work and the quality of the software that we are developing. It's important to establish why we require it as developers and why we should care about it as testers or product managers. It matters to multiple stakeholders. First is customers, who want new features that work flawlessly all the time so that they can depend on them, use them, and trust the product.

Second is the business. For any business, speed is important because it wants to move fast and grow fast. It faces business pressures such as revenue timelines, GMV targets, and competition. Many startups operate with limited funds, so the longer the work takes, the greater the economic impact on them.

Third is the product. Software development is an intellectual process that has an impact on the product itself, and any product you see today is continuously improving. Even Google, after more than 20 years of development, is still progressing because new situations and new demands keep arising. So we need to continuously enhance our product.

Fourth is the engineering team. For them, it's very important to establish this balance. They will be able to create very efficient working models and a healthy engineering culture, and they can stop wasting their time on avoidable defects, rollbacks, etc.

What if we don't get this right?

There will be an impact on the organization and engineering.

The impact on the organization is that it misses product feature launches and sales timelines, and faces financial challenges in supporting the staff it has hired. Poor product quality can lead customers to abandon the product, and the company's money goes down the drain. Pressure on the customer support team increases because customers keep facing issues with no end in sight. All of this becomes an impediment for the organization when it competes with other companies.

Engineering, on the other hand, ends up in inefficient situations where a lot of time is wasted on unnecessary work. This leads to blame games, a deteriorating culture, and people who stop enjoying their work and quit.

Why are we not doing this already?

One of the reasons is that the software industry is relatively young. It started only 50 years ago or so; before that, software and computers were confined to research or very high-end projects. Now it has become mainstream. The nature of software is that it produces technology, and technology is disrupting things at a very fast pace. For example, we no longer need a conference hall to hold shows or meetings. Technology is changing the way we work.

Compare that with other industries, for example, mechanical engineering, medicine, accounting, or the food industry, where such rapid changes haven't been happening. Because we are a young industry, there has been little time for knowledge to be passed down from one generation to the next, while change is unavoidable. Embracing change and being flexible enough to adapt to it have not yet been thought through as essential ingredients for an engineering team.

So, how can we improve our testing?

We define the parameters under which we work. Speed, as we established earlier, is the steady pace of software development that our current team can sustain over time. It's not about being rash or crazy. It's more important to have a sustainable pace that you can maintain without your team burning out all the time.

People don't want to work that way; they want a work-life balance. So, we must establish an environment with a steady pace, where a bar of quality has to be met and is evaluated from both a usability and a technical standpoint.

What are we trying to optimize on a bigger level?

What we're trying to optimize is lead time. It is the time between when the business gives you the requirement and when it is made available to customers.
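As a small illustration of this definition, lead time can be computed as the difference between two timestamps. The function name and the dates below are assumptions made for the example, not something from the talk.

```python
from datetime import datetime, timedelta


def lead_time(requested_at: datetime, released_at: datetime) -> timedelta:
    """Time from receiving the requirement to making it available to customers."""
    if released_at < requested_at:
        raise ValueError("release cannot precede the request")
    return released_at - requested_at


# Hypothetical dates, for illustration only.
requested = datetime(2020, 3, 2, 9, 0)
released = datetime(2020, 3, 16, 17, 0)
print(lead_time(requested, released).days)  # 14
```

Tracking this number per requirement over time is one way to see whether changes to the develop, test, and release phases are actually helping.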

Let's focus on the DTR cycle: develop, test, and release.

These are the three phases. Development is intellectual, creative work and is largely manual. Testing is done manually, although there are ways to automate it, and release is generally handled through DevOps or SRE.

How can we improve our testing?

There are four things that we need to get right:

- Adopt the right orientation

- Adopt the right culture

- Adopt the right strategy for automation

- Do automation the right way

So, adopting the right orientation has four points:

- Prevention over detection

- Automation

- Collaboration

- Perseverance

The first and foremost is prevention over detection. Ask many engineering teams what the job of a tester or QA is, and the answer is that it is to find bugs. This mental barrier is why best practices are not prevalent, and it has limited the adoption of the right orientation. The job of a tester is more to prevent bugs than just to detect them.

Second is automation. Software is created to cut down on the manual work of the rest of the world, yet the software industry itself does not apply automation to its own work. We are creating systems for others, but we're not applying them to ourselves.

Collaboration is important because software development as a whole is not an individual game. It's not about one person doing an excellent job; it's about all members coming together on a mission to achieve a goal and contributing in their best individual capacities.

Perseverance means time, stamina, patience, and hard work. Improvement doesn't happen overnight, even though we are all intelligent people. It takes time for us to change our habits, our current thought processes, and our understanding, but that change is what leads to success in the future.

Adopt the right culture: Quality is everyone's responsibility, not just someone's job. It's not that only the tester is responsible; developers, product managers, business people, and DevOps are all equally responsible because we are all part of the same team. In some companies, developers are responsible for both development and testing, and in some teams, there are separate testers. To adopt this culture, it is very important that everybody raises their game and is more conscious of and responsible for enabling quality. It is important that developers raise the quality of the first cut that they release for internal testing, the alpha release, as we call it.

One of the crucial things for enabling this is the adoption of test-driven development by developers. It's a misconception that it slows you down. It requires an initial investment to develop a style of coding that is more congenial to the overall quality of the software, but once learned, it just becomes a habit.

Learning any language takes some time, but nobody says that it slows you down; a developer needs time to get comfortable with any new language or technology. That is part of our job, because we keep learning every few months, every day.
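To make the test-driven style concrete, here is a minimal sketch. The `cart_total` function and its rules are hypothetical and were not part of the talk; the point is only the order of work: the three tests are written first and fail, and then just enough code is written to make them pass.

```python
def cart_total(prices, discount_percent=0):
    """Sum the prices and apply a percentage discount (hypothetical example)."""
    if not 0 <= discount_percent <= 100:
        raise ValueError("discount_percent must be between 0 and 100")
    subtotal = sum(prices)
    return round(subtotal * (100 - discount_percent) / 100, 2)


# In test-driven development these tests exist before cart_total does.
def test_empty_cart_costs_nothing():
    assert cart_total([]) == 0


def test_discount_is_applied():
    assert cart_total([100, 50], discount_percent=10) == 135.0


def test_invalid_discount_is_rejected():
    try:
        cart_total([100], discount_percent=150)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a discount above 100")
```

The functions follow the `test_` naming convention, so a runner such as pytest can collect them, or they can simply be called directly.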

Another point, on the collaboration side, is proactively seeking feedback, which helps developers understand the possible cases they should take care of. If they are aware of these cases, they will write better code because they know what to be mindful of while writing it. Testers, in turn, should understand how things are implemented, because if they don't understand how things are made, they won't be able to identify where things fail.

So investing in upskilling and educating your testers is very helpful.

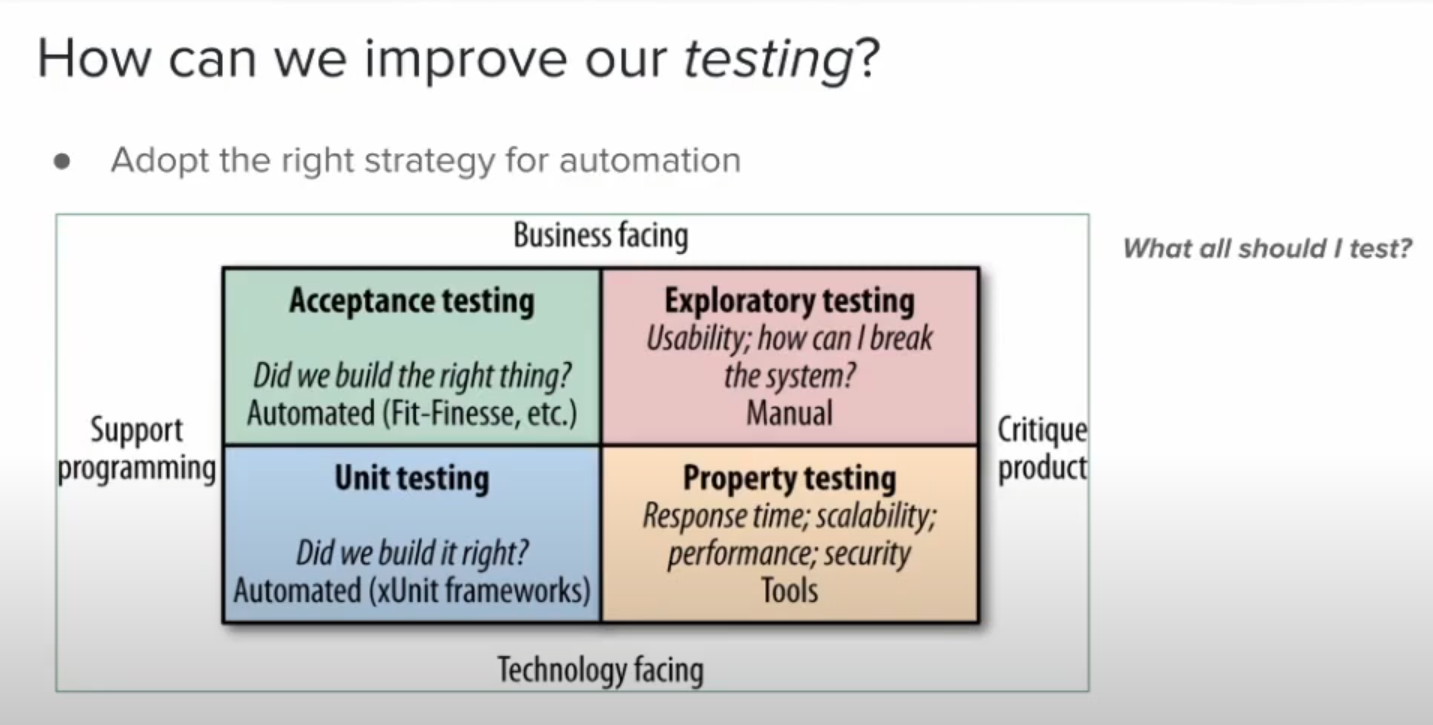

Adopt the right strategy for automation: what should I automate? Before automating, first understand, from a manual standpoint, what we are testing and what the scope of testing is. The testing quadrant idea, initially proposed by Brian Marick in the early 2000s, describes four quadrants of testing, and moving through them in a clockwise manner is the sequence in which we should attack them.

Unit testing and acceptance testing can be targeted through programming, so we can automate them. Of the other two quadrants, one is technology-facing and has tools that can help us, and one is business-facing.

How do I plan for automation?

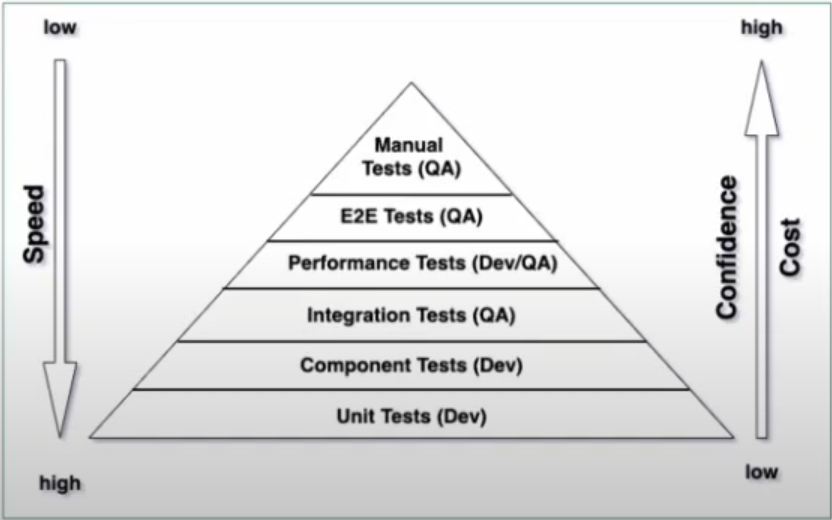

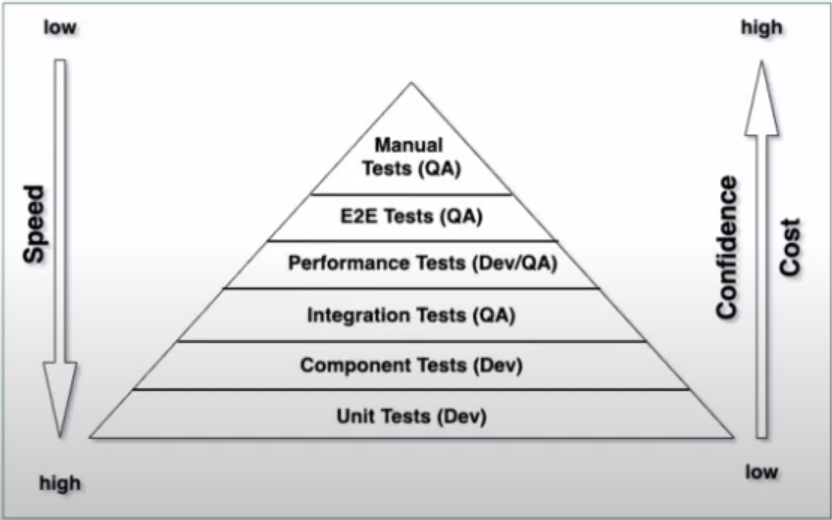

This is the test pyramid, a concept originally proposed by Mike Cohn sometime around 2004 to 2006. He proposed that we divide the kinds of tests that we do into different categories and target them so that our level of confidence gradually rises as we spend time building these layers into the application.

Unit tests are written at the function level.

Component tests are at a particular module, service, or microservice level. Here you test whether the microservice does what it is expected to do by stubbing its dependencies: the external services and the dependent microservices.
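A component test with stubbed dependencies might look like the sketch below. `OrderService` and its inventory client are hypothetical names invented for this example; the stub is built with `unittest.mock.Mock` from the Python standard library, so no real inventory service is needed.

```python
from unittest.mock import Mock


class OrderService:
    """Hypothetical component under test; it depends on an external inventory service."""

    def __init__(self, inventory_client):
        self.inventory_client = inventory_client

    def place_order(self, sku, quantity):
        available = self.inventory_client.get_stock(sku)
        if quantity > available:
            return {"status": "rejected", "reason": "insufficient stock"}
        self.inventory_client.reserve(sku, quantity)
        return {"status": "accepted"}


def test_order_is_rejected_when_stock_is_low():
    inventory = Mock()  # stands in for the dependent service
    inventory.get_stock.return_value = 2
    result = OrderService(inventory).place_order("sku-1", 5)
    assert result["status"] == "rejected"
    inventory.reserve.assert_not_called()


def test_order_reserves_stock_when_available():
    inventory = Mock()
    inventory.get_stock.return_value = 10
    result = OrderService(inventory).place_order("sku-1", 5)
    assert result == {"status": "accepted"}
    inventory.reserve.assert_called_once_with("sku-1", 5)
```

Because the dependency is passed in through the constructor, the same component can be wired to the real client in an integration test without changing its code.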

An integration test asks how your module or microservice plays within the larger ecosystem. Any product or software system is a combination of multiple components working together smoothly, so the question is how a change in one component impacts the other components connected to it.

Above integration testing is performance testing. We have marked it dev/QA because different teams are oriented differently. Ideally, it should be under the ownership of developers because it gives them very important feedback and insight into how the code they write, the designs they develop, and the design choices they make impact performance. Conducting the test is one thing; taking the feedback and improving the performance is another. If the same person is able to run the test, collect the feedback, and change the code so that the next run performs better, it becomes a very easy and convenient learning experience.

How should I generate my test data?

Start with what a unit test is trying to test: a block of logic whose input is an event or data and whose output is another form of data. The logic has some state, or reference data. It takes a combination of the input data and its reference data, applies some logic to it, and gives out output data. If we mutate the reference data, we can create different scenarios and logical situations for the application, which can lead to different responses.

It thus becomes important that we can create multiple sets of reference test data.
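The idea of mutating reference data to create scenarios can be sketched as follows. The purchase-approval logic, the field names, and the values are all hypothetical, chosen only to show one base data set producing several test cases.

```python
import copy

# Hypothetical reference data that the logic consults when handling an input.
BASE_REFERENCE = {"credit_limit": 1000, "account_active": True}


def approve_purchase(amount, reference):
    """Logic under test: combines the input (amount) with the reference data."""
    if not reference["account_active"]:
        return "declined: inactive account"
    if amount > reference["credit_limit"]:
        return "declined: over limit"
    return "approved"


def with_overrides(**overrides):
    """Return a mutated copy of the base reference data."""
    data = copy.deepcopy(BASE_REFERENCE)
    data.update(overrides)
    return data


# Same input each time; only the reference data changes between scenarios.
SCENARIOS = [
    (500, with_overrides(), "approved"),
    (500, with_overrides(credit_limit=100), "declined: over limit"),
    (500, with_overrides(account_active=False), "declined: inactive account"),
]

for amount, reference, expected in SCENARIOS:
    assert approve_purchase(amount, reference) == expected
```

Each scenario works on its own copy, so one test cannot leak state into another and the base data stays untouched.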

Do automation the right way: There are some behavioral expectations from automation suites. They should let developers and testers collaborate, cover functional and non-functional aspects, give developers faster feedback, and run successfully in multiple environments.

Technical expectations include clean code that stays maintainable in the long term, and dynamic coding.
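One common way to let a suite run in multiple environments is to keep environment details out of the tests and select them at run time. The sketch below assumes an environment variable named `TEST_ENV` and made-up URLs; both are illustrative choices, not something prescribed in the talk.

```python
import os

# Hypothetical environments and URLs, for illustration only.
ENVIRONMENTS = {
    "local": {"base_url": "http://localhost:8000", "timeout_seconds": 5},
    "staging": {"base_url": "https://staging.example.com", "timeout_seconds": 10},
}


def load_settings(env=None):
    """Pick settings by explicit name, else by TEST_ENV, else fall back to local."""
    name = env or os.environ.get("TEST_ENV", "local")
    try:
        return ENVIRONMENTS[name]
    except KeyError:
        raise ValueError(f"unknown test environment: {name!r}") from None


settings = load_settings("staging")
print(settings["base_url"])  # https://staging.example.com
```

With this shape, the same suite can run from a developer's machine and from the build server by changing only the variable.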

But there are many challenges that we face while doing this.

- People challenge

- Process challenge

- Technical challenge

- Knowledge challenge

One is the people challenge. Developers think their job is development and testers think their job is testing; developers are valued more than testers; and the two don't collaborate because of friction between them, as developers are reluctant to own up to the quality of what they develop.

The second is the process challenge. Teams tend to be technology-oriented rather than mission-oriented. Generally, automation suites are consumed by testers and not developers; they are executed from a tester's machine and not from the build server.

Then come the technical challenges. The development code or application design doesn't make test automation easy to achieve. The suites aren't designed to run on multiple target environments or with various test data sets.

Another challenge is the knowledge challenge. The testers don't feel confident with their programming skills and don't fully understand how the application is developed. They don't know how to measure the effectiveness of their automation suites. They don't have proper test case repositories and documentation.

How to start the journey?

It's a long-term game, so it requires management commitment and support. Promoting a culture of collective responsibility for quality also helps. So does making small progress that adds value and is usable for daily runs, and collaborating with developers to enhance the automation suite's quality and effectiveness.

Build, Measure, Learn, and Evolve.

For more such talks, attend Git Commit Show live. The next season is coming soon.
