Grand unified theory of brain function: can data science lead us there?

This is the written version of the talk given by Arpan Banerjee at the Git Commit Show 2020. In the first half of this talk, Arpan discusses some aspects of the evolution of such theories.

In the second half, Arpan discusses how modern techniques have let us peek into the dynamics of brain computation, and how exciting new developments have produced a deluge of empirical data that could resolve several open debates in human cognition. He illustrates this with examples from his group's work on multisensory perception.

About the speaker

Arpan Banerjee is a scientist at the National Brain Research Centre, where he has worked for the last 7 years. His primary interest is human perception. In this talk, he shares how data science and neuroscience can help us understand the human brain, and how far we have come.

Content:

- The history of the idea of grand unified theory of brain function

- Insights from multisensory perceptions

The history of the idea of grand unified theory of brain function

Starting with history, let's look at two scientists at the center of the early debate about how the brain is built. The first was Camillo Golgi, after whom the Golgi bodies in cells are named. He came up with a unique way to stain cells so that they could be seen under the microscope, and he observed structures like these: the brain cells in a tissue, which we now know as neurons. Golgi proposed that these neurons are physically joined to one another, much like a bedsheet woven from many threads. In his view, the brain is made up of continuous matter.

This theory, which he called the reticular theory, holds that our thoughts, actions, and everything else are governed by the activity of this continuous sheet. In that sense, the reticular theory was Golgi's grand unified theory of brain function.

Then came Santiago Ramón y Cajal from Spain, who was inspired by Golgi's staining technique. He proposed that these are individual cells rather than a continuous sheet: they are the individual functional units of the nervous system, so it is the cells themselves that compute information in the brain. This idea, that information is computed in individual nerve cells, is known as the neuron doctrine.

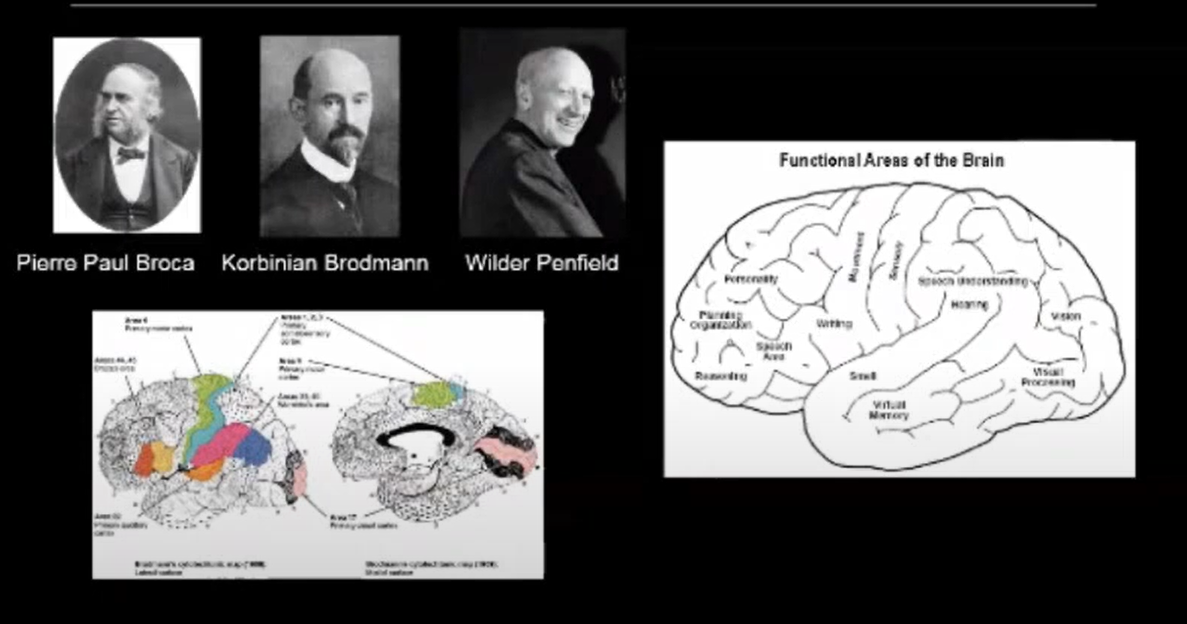

Meanwhile, people like Paul Broca, Korbinian Brodmann, and Wilder Penfield were mapping the brain, in part by studying patients with brain lesions or patients undergoing brain surgery, which let them examine brain function in detail. They found that different brain areas can be attributed to different functions. The idea is that the brain is segregated into distinct areas, each acting as a module that processes a particular kind of information.

Then there was Charles Sherrington, who described how the nerve cells that Ramón y Cajal talked about are connected to one another. These connections can be either excitatory or inhibitory, and Sherrington named the point of connection the synapse. The synapse is important for the propagation of signals. He also discussed how information might be integrated across groups of connected cells.

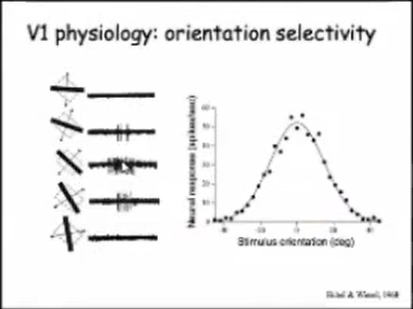

Fast-forwarding past the Second World War, Hubel and Wiesel recorded from the visual cortex of a cat in the late 1950s. With the anesthetized cat's eyes held open, they showed it bars of light at different orientations. When a bar was shown at a particular orientation, for example 45 degrees from the baseline, a given neuron fired maximally. These firing events are called action potentials, and they can be treated as binary: a spike (one) or no spike (zero). In the recording trace, each vertical bar represents one action potential. A cell tuned to a particular angle fires more when the stimulus has that orientation. If you plot the neuron's firing rate (the rate of action potentials) as a function of stimulus orientation in degrees, you get a tuning curve that peaks at the orientation the neuron prefers, at zero in this plot. You can draw the same curve for different neurons and work out what each cell is responding to.
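To make the idea of a tuning curve concrete, here is a minimal simulation sketch. It assumes a hypothetical neuron with a Gaussian tuning curve (preferred orientation 0 degrees, peak 50 spikes/s above a 2 spikes/s baseline, 20 degree width; all numbers are illustrative, not from the experiment) and generates binary spike trains in 1 ms bins, echoing the "zeros and ones" view of action potentials. Averaging spike counts over trials recovers a curve that peaks at the preferred orientation.

```python
import math
import random

def tuning_curve(theta_deg, preferred_deg=0.0, r_max=50.0, r_base=2.0, width_deg=20.0):
    """Mean firing rate (spikes/s) of a hypothetical Gaussian-tuned neuron."""
    # Orientation repeats every 180 degrees, so wrap the difference into [-90, 90).
    d = (theta_deg - preferred_deg + 90.0) % 180.0 - 90.0
    return r_base + r_max * math.exp(-0.5 * (d / width_deg) ** 2)

def spike_train(rate_hz, duration_s, rng, dt=0.001):
    """Binary spike train: 1 if a spike occurs in a time bin (1 ms by default), else 0."""
    p = rate_hz * dt  # per-bin spike probability (Bernoulli approximation to Poisson)
    return [1 if rng.random() < p else 0 for _ in range(round(duration_s / dt))]

def measured_tuning(orientations, trials=20, duration_s=1.0, seed=0):
    """Average firing rate at each orientation, estimated from simulated trials."""
    rng = random.Random(seed)
    rates = {}
    for theta in orientations:
        spikes = sum(sum(spike_train(tuning_curve(theta), duration_s, rng))
                     for _ in range(trials))
        rates[theta] = spikes / (trials * duration_s)
    return rates

if __name__ == "__main__":
    for theta, rate in measured_tuning([-90, -45, 0, 45, 90]).items():
        print(f"{theta:>4} deg: {rate:5.1f} spikes/s")
```

Repeating this with a different `preferred_deg` for each simulated cell is the toy analogue of drawing the same curve for many neurons.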

Computer science was developing enormously at that time, and people were asking what a code is. This language spread to neuroscientists, and neuronal activity came to be understood as a kind of code, which we call the neural code.

A code in a machine is fixed, but in the brain, this code changes with context and learning. It can also be changed by the real-world environment.

So, in a way, the idea of a code may not be the best way to look at information processing across the entire brain.

How does information get integrated?

This question was missing from the segregation theories, which understood the brain as a set of separate areas with distinct structures.

For example, you remember me as a single composite object that combines my speech, my voice, and your visual representation of me. You don't perceive me as separate, split-up pieces.

Where is this integration in the brain happening?

An entire stream of areas along several pathways is involved in this information processing.

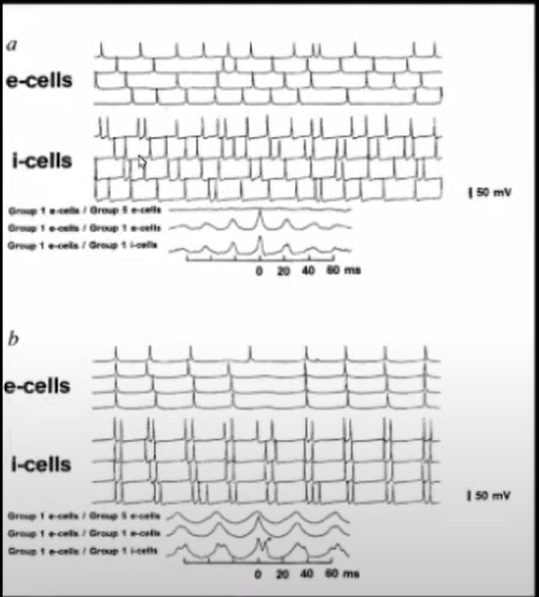

This experiment was done on rats and published in Nature, a very important journal for scientists, and it is quite interesting. The researchers looked at individual cells, recording from inside them, as represented here.

At the same time, they recorded field activity with an electrode placed on the surface of the animal's cortex. What they see is that the troughs of these wave-like patterns coincide with increased activity of the individual cells, both excitatory and inhibitory. The picture shows that when all of this activity is aligned, you get robust activity at the surface of the brain, and in what we measure from outside the head with human EEG. (EEG is a technique similar to the ECG recorded from the heart, except that it records the brain's electrical signals.) When this alignment breaks down, the wave-like patterns recorded far away from where the action is happening are much weaker.

So the nervous system is organized to synchronize activity across different levels, producing activity at a superficial level that we can observe.
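A toy way to see why alignment matters: treat a surface recording as the sum of many cells' oscillations. The sketch below assumes 100 hypothetical cells, each a unit-amplitude 10 Hz sinusoid (both numbers are illustrative choices), and compares the peak of the summed signal when all phases are aligned with the peak when phases are scattered at random.

```python
import math
import random

def summed_peak(phases, freq_hz=10.0, fs=1000, duration_s=1.0):
    """Peak absolute value of the sum of unit-amplitude sinusoids, one per phase."""
    n = round(fs * duration_s)
    total = [sum(math.sin(2 * math.pi * freq_hz * t / fs + ph) for ph in phases)
             for t in range(n)]
    return max(abs(v) for v in total)

rng = random.Random(0)
n_cells = 100
aligned = summed_peak([0.0] * n_cells)  # every cell oscillates in phase
scattered = summed_peak([rng.uniform(0, 2 * math.pi) for _ in range(n_cells)])
print(f"aligned: {aligned:.1f}, scattered: {scattered:.1f}")
```

With aligned phases the peak grows in proportion to the number of cells, while with random phases it grows only roughly with its square root, which is one simple reason distant sensors pick up synchronized activity far more easily.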

Taking this idea of integration further, we can borrow concepts from physics: self-organization and nonlinear dynamical systems. Synchronization can be used to understand how the activity of large groups of neural elements is coordinated, and non-equilibrium thermodynamics can describe the collective behavior of such a system. All of this amounts to a kind of coordinated action, which can be reflected in the coordinated activity of the brain.
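One classic model of synchronization in nonlinear dynamical systems is the Kuramoto model of coupled phase oscillators; it is used here only as an illustration, not as the speaker's model. In this sketch, each of 100 oscillators has its own natural frequency (drawn from a standard normal distribution, an assumption), and the coupling strength `K` pulls them toward a common phase. The order parameter `r` is near 0 for incoherent activity and near 1 for full synchrony.

```python
import cmath
import math
import random

def kuramoto_order(coupling, n=100, steps=2000, dt=0.01, seed=0):
    """Simulate the Kuramoto model and return the final order parameter r in [0, 1]."""
    rng = random.Random(seed)
    omegas = [rng.gauss(0.0, 1.0) for _ in range(n)]            # natural frequencies
    thetas = [rng.uniform(0.0, 2 * math.pi) for _ in range(n)]  # initial phases
    for _ in range(steps):
        # Mean field z = r * exp(i * psi): the average of exp(i * theta_j).
        z = sum(cmath.exp(1j * th) for th in thetas) / n
        r, psi = abs(z), cmath.phase(z)
        # Mean-field form of d(theta_i)/dt = omega_i + (K/N) * sum_j sin(theta_j - theta_i).
        thetas = [th + dt * (w + coupling * r * math.sin(psi - th))
                  for th, w in zip(thetas, omegas)]
    return abs(sum(cmath.exp(1j * th) for th in thetas) / n)

if __name__ == "__main__":
    for k in (0.5, 1.0, 2.0, 4.0):
        print(f"K = {k}: r = {kuramoto_order(k):.2f}")
```

Below a critical coupling the population stays incoherent; above it, a synchronized cluster self-organizes without any central controller.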

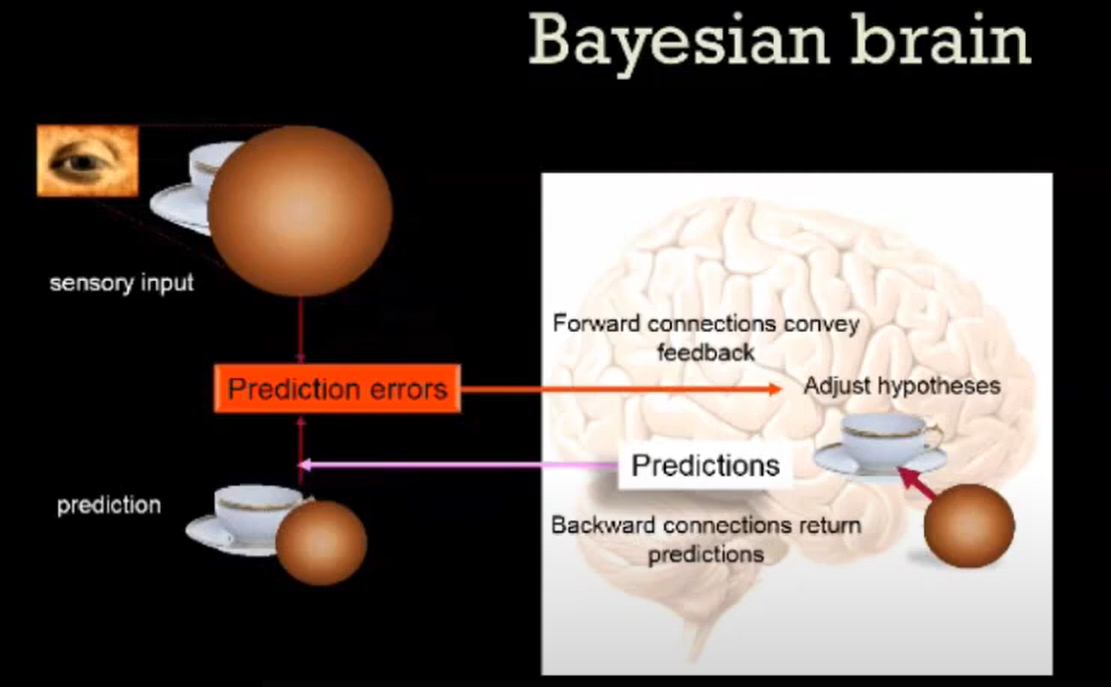

From the perspective of computer science and information theory, brain processing can also be understood in terms of Bayesian principles.

Bayes' theorem in probability describes how a stimulus and a response, both of which are stochastic variables, are related through conditional probabilities: the probability of the stimulus given the response, and the probability of the response given the stimulus. What we have already learned about the stimulus acts as the prior.
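Written out, with $S$ the stimulus and $R$ the neural response (notation chosen here for illustration), Bayes' theorem reads as below: $P(S)$ is the prior learned from experience, $P(R \mid S)$ is the likelihood of a response given a stimulus, and $P(S \mid R)$ is the posterior, the brain's inference about the stimulus once a response is observed.

```latex
P(S \mid R) = \frac{P(R \mid S)\, P(S)}{P(R)},
\qquad
P(R) = \sum_{s} P(R \mid s)\, P(s)
```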

For example, as we grow, we form associations between stimuli and responses: we learn what each response means for a given stimulus. Our knowledge of the stimulus is therefore conditional on the response, and we build up a good sense of the whole stimulus space, which serves as our prior. So, given a response, the brain can predict what the stimulus was.
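A minimal sketch of this kind of inference, assuming a single hypothetical neuron whose spike count is Poisson-distributed with a learned expected count for each of three candidate orientations (the counts and priors below are made up for illustration). Given an observed spike count, the decoder applies Bayes' theorem to get a posterior over stimuli.

```python
import math

def poisson_pmf(k, mean):
    """Probability of observing k spikes when the expected count is `mean`."""
    return math.exp(-mean) * mean ** k / math.factorial(k)

def posterior(spike_count, expected_counts, prior):
    """Bayes' rule over a discrete set of stimuli.

    expected_counts: stimulus -> expected spike count (the learned likelihood model)
    prior:           stimulus -> P(stimulus), built up from past experience
    """
    unnorm = {s: poisson_pmf(spike_count, expected_counts[s]) * p for s, p in prior.items()}
    evidence = sum(unnorm.values())  # P(R), the normalizing constant
    return {s: v / evidence for s, v in unnorm.items()}

expected_counts = {0: 20.0, 45: 6.0, 90: 2.0}  # hypothetical tuning, in spikes per trial
flat_prior = {0: 1 / 3, 45: 1 / 3, 90: 1 / 3}
skewed_prior = {0: 0.05, 45: 0.05, 90: 0.9}    # e.g. 90 degrees seen far more often
for name, prior in (("flat", flat_prior), ("skewed", skewed_prior)):
    post = posterior(5, expected_counts, prior)
    print(name, {s: round(p, 3) for s, p in post.items()})
```

With a flat prior, 5 spikes point to 45 degrees; with a prior that strongly favours 90 degrees, the same response is read as 90 degrees, which shows how learned priors shape what the brain infers.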

Why is this important?

Imagine the eye and its retina. The retina receives images from the outside world, but it translates them into sequences of ones and zeros that the brain can work with. The brain can't see the outside image; it only sees these ones and zeros. From the ones and zeros arriving from these earlier levels, it has to decode what is out there.

How do you decode?

You want to understand how these ones and zeros relate to the ones and zeros you have accumulated from childhood into adulthood. In a way, the brain is always trying to predict what is happening. If the brain's purpose in life is to predict, so that you can survive in different environments, then it has to match that prediction against the ones and zeros that are coming in. If there is a mismatch between the prediction and the incoming ones and zeros, the brain has to register that this is something new.
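The predict, compare, and update loop can be sketched with a simple delta rule. This is a toy illustration under stated assumptions (a scalar input, a fixed learning rate, and a fixed novelty threshold, all hypothetical), not a model from the talk: the prediction error drives learning, and a large error flags the input as new.

```python
def track_with_prediction_error(signal, learning_rate=0.2, novelty_threshold=0.5):
    """Toy predictive loop: predict the next input, compare, and update.

    Returns the time steps whose prediction error exceeded the threshold,
    i.e. the inputs this toy model flags as 'something new'.
    """
    prediction = signal[0] if signal else 0.0
    novel_steps = []
    for t, observed in enumerate(signal):
        error = observed - prediction        # mismatch between prediction and input
        if abs(error) > novelty_threshold:
            novel_steps.append(t)
        prediction += learning_rate * error  # move the prediction toward the input
    return novel_steps

# A constant input that suddenly changes at t = 10.
print(track_with_prediction_error([0.0] * 10 + [1.0] * 10))
```

Right after the change, the error is large for a few steps and those steps are flagged; as the prediction catches up, the error shrinks and the new input stops being surprising.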

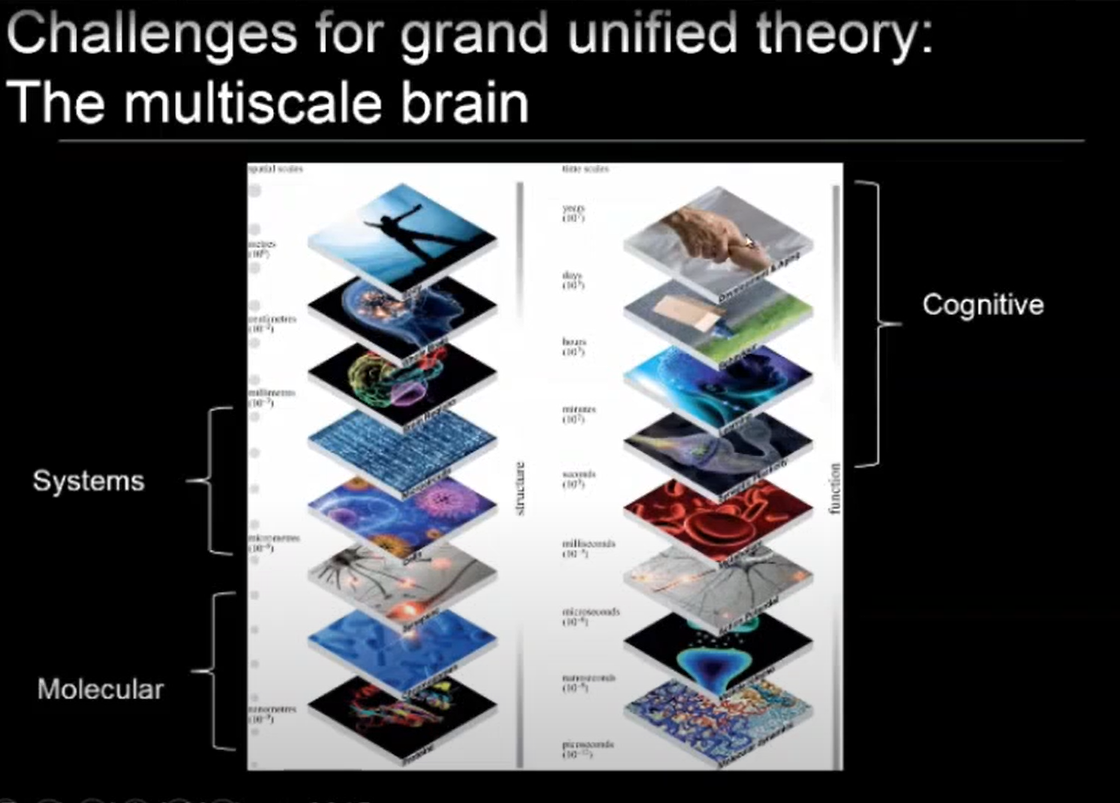

What challenges does a multisensory, multi-scale brain pose, and what does it take to bridge these scales?

For example, the brain has different structural levels and different functional levels. The structural levels run from proteins and chromosomes to synapses, cells, microcircuits, brain regions, the whole brain, and behavior. The timescales vary just as widely: development and aging span years of life, whereas reversible processes at the protein level happen at the nanoscale, and each scale needs its own measurement devices.

So how do we study behavior across all of these levels?

We can study learning, design various types of sophisticated microscopes, do live-cell imaging, and so on. But we have to bridge these different scales, and that is one of the challenges for any grand unified theory of brain function. It has not been resolved yet.

Insights from multisensory perceptions

What is multi-sensory perception?

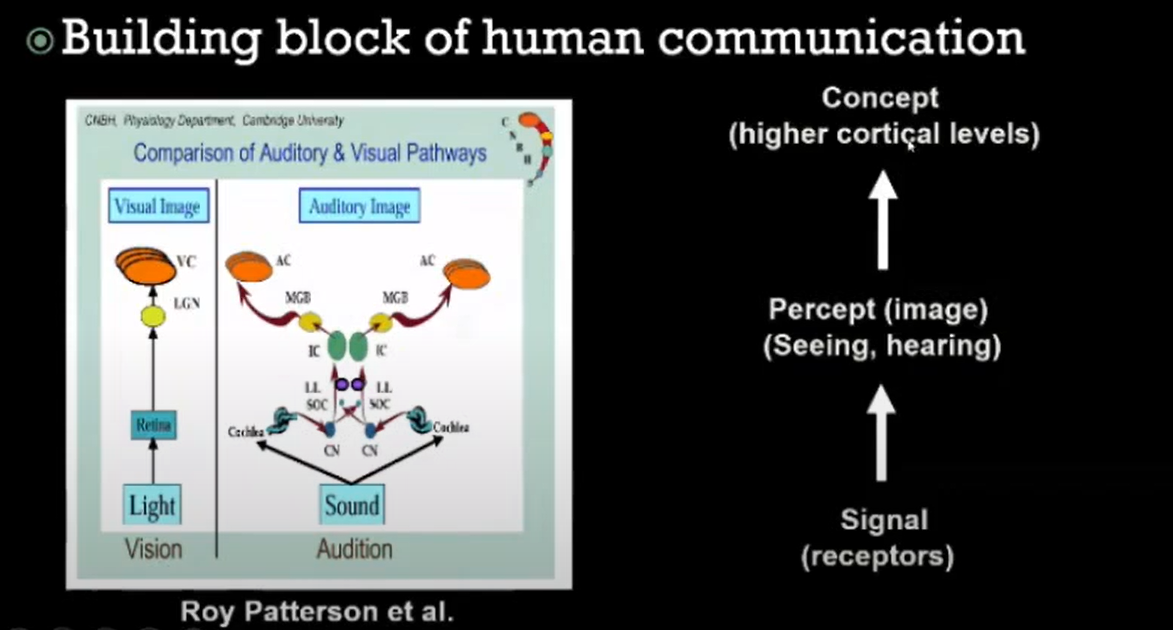

Let's take two senses, vision and audition. When light falls on the retina, the resulting signals give rise to a visual image in the visual cortex. In audition, sound reaches the ears, where structures like the cochlea perform a kind of Fourier transform on the incoming signals. The result is sent on, again as a neural code, to the auditory cortex, shaping your auditory perception. So, across the senses, you have signals and percepts, and from these the brain has to develop concepts. What we think is going on in the brain happens at the level of concepts.
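The cochlea's frequency decomposition is only loosely analogous to a Fourier transform, but a plain discrete Fourier transform shows the basic idea: a mixed sound is split into its component frequencies. The sketch below assumes a hypothetical signal made of a 50 Hz tone plus a weaker 120 Hz tone, sampled at 1000 Hz for 0.5 s, and recovers the strongest component.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Magnitude of each frequency bin (0 up to Nyquist) of a plain O(N^2) DFT."""
    n = len(samples)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples)))
            for k in range(n // 2 + 1)]

def dominant_frequency(samples, fs):
    """Frequency in Hz of the strongest non-DC component."""
    mags = dft_magnitudes(samples)
    k = max(range(1, len(mags)), key=mags.__getitem__)
    return k * fs / len(samples)

fs = 1000                 # samples per second
n = fs // 2               # 0.5 s of signal, so bins are 2 Hz apart
sound = [math.sin(2 * math.pi * 50 * t / fs) + 0.5 * math.sin(2 * math.pi * 120 * t / fs)
         for t in range(n)]
print(dominant_frequency(sound, fs))
```

Real code would use an FFT library; the O(N^2) loop is kept here so the transform stays visible and dependency-free.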

Conflicts in perception can therefore provide a gateway to understanding brain processing. For example, this is a static stimulus: white dots interspersed with black dots. But when you look at it, it produces a dynamic illusion.

When such illusions arise, there is a conflict between perception and concept, and this gives us a way to study the brain's activity.

Multisensory perception is especially relevant to understanding speech, because speech is very much a multisensory phenomenon. Studying it shows how different systems, such as the auditory and visual networks, interact. Speech is also the most fundamental form of communication, long predating Facebook or Twitter.

How do different auditory and visual systems interact with each other when you are having these illusions?

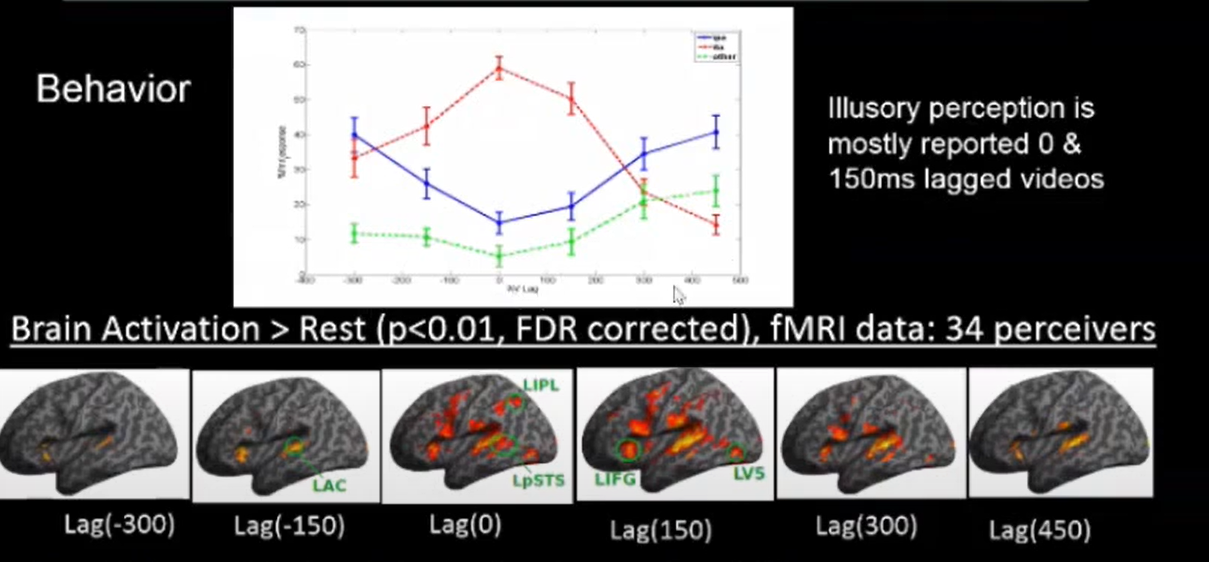

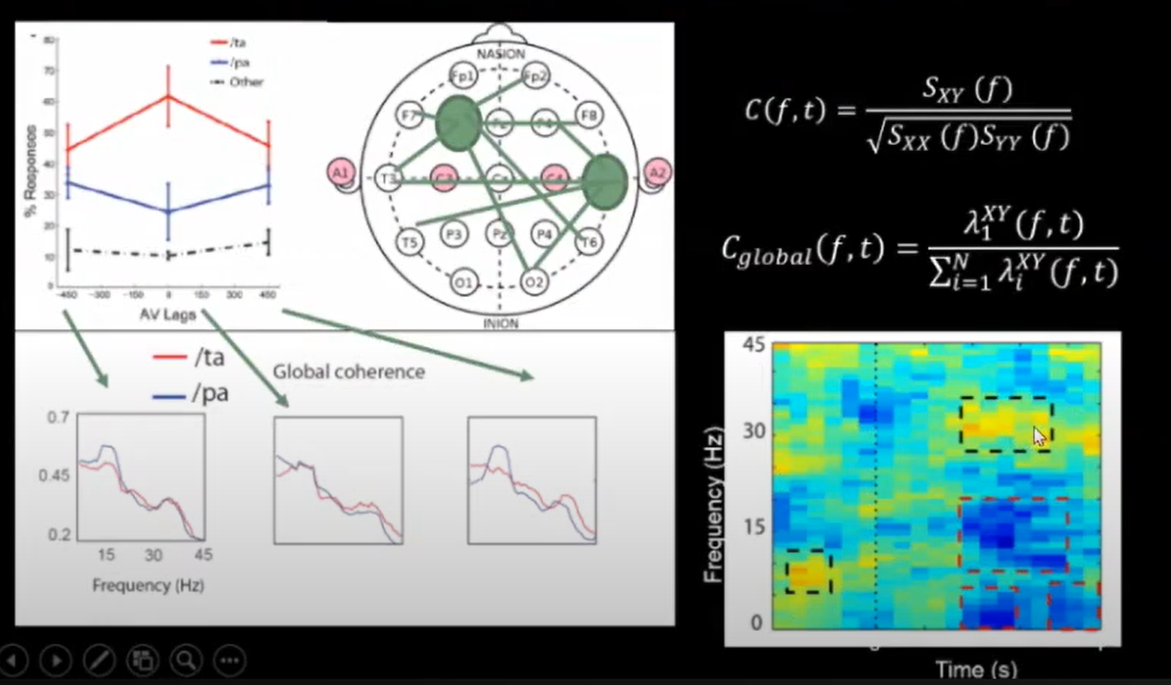

This is an fMRI experiment with a total of 55 volunteers across different age ranges. The subjects reported what they heard by pressing buttons on response boxes, and the red line tracks these reports. When the auditory and visual streams are completely in sync, with zero lag, illusory perception increases, and it decreases as the auditory-visual (AV) lag moves away from zero in either direction: on one side the auditory stimulus precedes the visual stimulus, and on the other the visual stimulus precedes the auditory one.

Looking at the brain areas, activity in the auditory cortex is roughly unchanged across all these lags, and visual cortex activation is likewise present across all of them. Interestingly, though, there is also an area known to be multisensory, meaning it is active only when signals from more than one sense are presented together: auditory-visual, visual-touch, or auditory-touch.
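The behavioral curve described above is, at its simplest, the fraction of trials reported as illusory at each AV lag. The talk does not describe how the curve was computed, so this is a hypothetical sketch: trials are assumed to be `(lag_ms, reported_illusion)` pairs, with negative lag meaning audio leads video (a sign convention assumed here), and the example trials are made up.

```python
from collections import defaultdict

def illusion_rate_by_lag(trials):
    """Fraction of trials reported as illusory at each audiovisual lag.

    trials: iterable of (lag_ms, reported_illusion) pairs; negative lag_ms
            means audio leads video (an assumed convention).
    """
    counts = defaultdict(lambda: [0, 0])  # lag -> [illusory reports, total trials]
    for lag_ms, reported in trials:
        counts[lag_ms][0] += int(reported)
        counts[lag_ms][1] += 1
    return {lag: hits / total for lag, (hits, total) in sorted(counts.items())}

# Made-up button presses, for illustration only.
example = [(-300, False), (-300, True), (0, True), (0, True), (300, False)]
print(illusion_rate_by_lag(example))
```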

Neurons in these areas are active when both sensory streams are present, and these areas appear to be more active during the illusion. Their activity correlates directly with the illusory perception, which means that these so-called multisensory areas do not just process the stimulus per se; they are also doing something related to the perception of the stimulus.

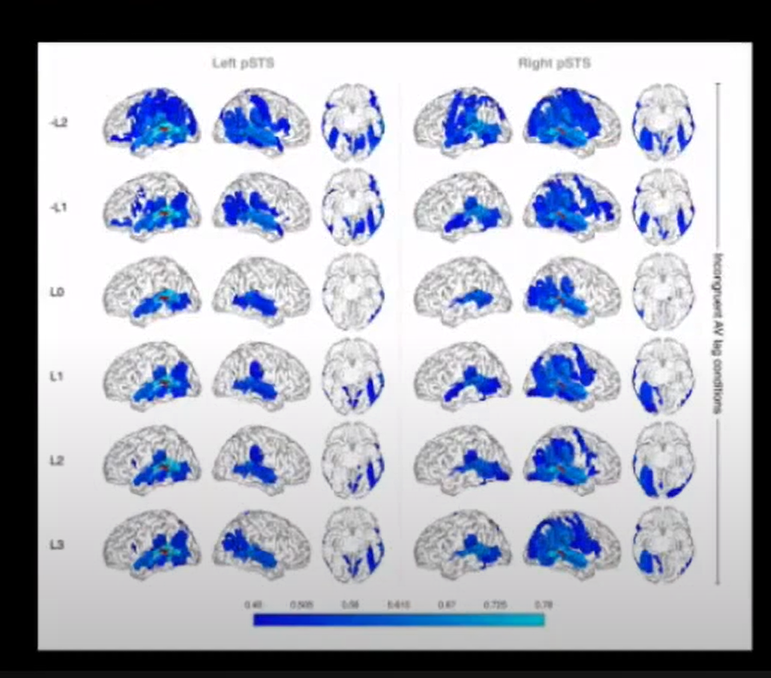

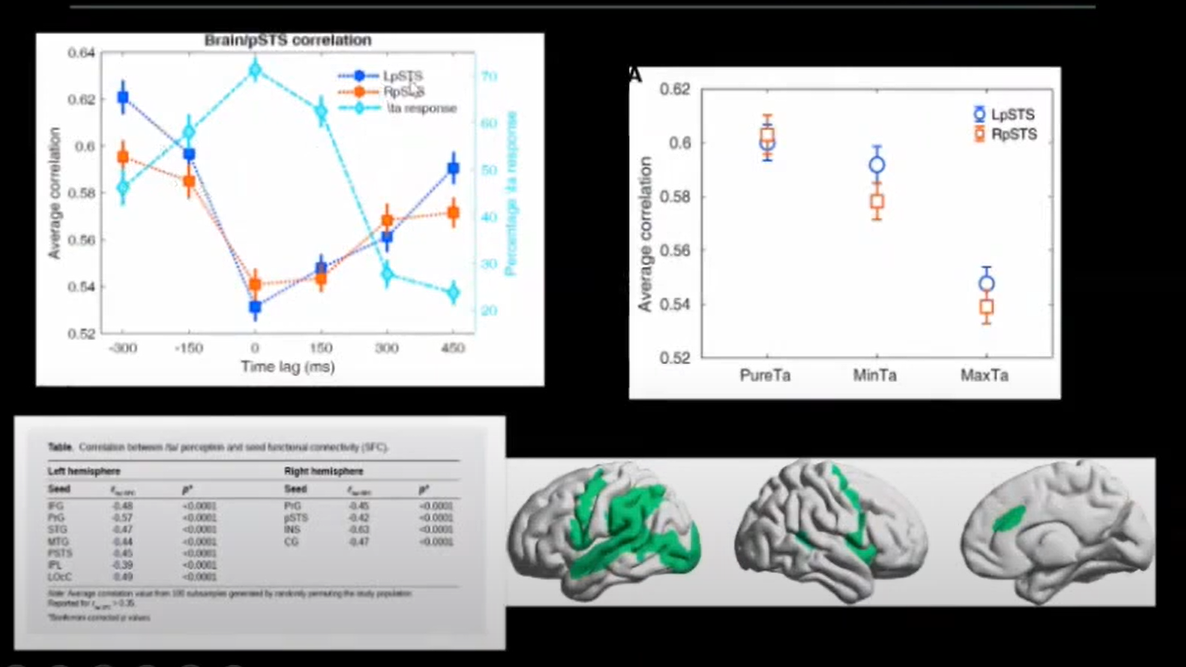

Long story short: segregated, physiologically distinct brain areas are active during the task, and some of them are more active when the illusion occurs. To understand the integrative processes, we used Pearson correlation, which can be computed between any two time series and measures how the two signals co-vary. If I place a seed at the red spot here, correlate the seed's time series with the rest of the brain, and keep the statistically significant correlations, I get the areas that appear to be connected to the seed: all of them co-vary with the seed's activity. The size of this network decreases as you approach zero lag, which is also where the illusion was happening.
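A minimal sketch of seed-based correlation, assuming each brain region is represented by one time series of equal length (region names and values here are hypothetical). The talk keeps statistically significant correlations; for simplicity this sketch substitutes a fixed |r| threshold, which is an assumption, and also returns the average seed correlation across regions, the kind of summary the talk uses for the STS.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length time series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def seed_network(seed_series, region_series, threshold=0.5):
    """Regions whose correlation with the seed reaches a fixed |r| threshold.

    region_series: region name -> time series (same length as seed_series).
    The fixed threshold stands in for a proper significance test.
    Returns (network, mean_r): the connected regions with their r values,
    and the mean seed correlation over all regions.
    """
    corr = {name: pearson(seed_series, ts) for name, ts in region_series.items()}
    network = {name: r for name, r in corr.items() if abs(r) >= threshold}
    mean_r = sum(corr.values()) / len(corr)
    return network, mean_r

seed = [1, 2, 3, 4, 5]
regions = {"A": [2, 4, 6, 8, 10], "B": [5, 4, 3, 2, 1], "C": [1, 3, 2, 5, 4]}
network, mean_r = seed_network(seed, regions, threshold=0.9)
print(sorted(network), round(mean_r, 3))
```

Repeating this for each AV lag and comparing network sizes or `mean_r` values is one way to turn the "shrinking network" observation into numbers.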

So, at the onset of the illusion, the network that was present in the brain seems to suddenly disappear, while activity increases in a specific set of brain areas: integration and segregation operate at the same time. We quantified this by averaging the seed correlations from the STS (superior temporal sulcus) across the entire brain.

The blue lines represent the average correlations in the left hemisphere (the brain has two hemispheres), and the red lines represent the average correlations in the right hemisphere. In both hemispheres, the correlation decreases. Plotting this brain correlation against lag alongside the behavior shows how nicely anti-correlated the two are: as activity in the pSTS increases, the pSTS becomes decoupled and further desynchronized from the rest of the brain. In a way, this decorrelation, or desynchronization, is something that defines us as human beings.

We repeated this experiment using EEG, because fMRI has poor temporal resolution, on the order of seconds, while our brain dynamics unfold on the order of milliseconds. We divided the participants into frequent perceivers, who often perceive the illusion, and rare perceivers, who mostly do not, and looked at the EEG dynamics of each group. Here we used a measure called coherence, which captures the spectral counterpart of the temporal correlation we computed in the fMRI, but with much greater temporal precision. The coherence map in the bottom right-hand corner unfolds in time, and high coherence means that the brain is synchronized at the specific frequency shown on the y-axis.

In this map, frequency is on the y-axis and time on the x-axis, and the dots mark the times when the stimulus appears in the video. Coherence increases in the gamma band, here between 30 and 40 Hz, whereas it decreases in the alpha band. In EEG we describe activity in terms of such rhythms: alpha is a frequency band around 10 Hz, and gamma is around 40 Hz. So gamma coherence increases, while the coherence of the network communicating at alpha frequencies decreases.
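Magnitude-squared coherence at a frequency f can be estimated by splitting two signals into segments, taking each segment's Fourier coefficient at f, and comparing the averaged cross-spectrum with the averaged power. This is one standard estimator, not necessarily the one used in the study. The sketch builds two hypothetical channels that share a 40 Hz (gamma) rhythm with a fixed phase lag but carry independent 10 Hz (alpha) rhythms whose phases change from segment to segment, plus noise; the frequencies come from the talk, everything else is a made-up illustration.

```python
import cmath
import math
import random

def fourier_coefficient(segment, freq_hz, fs):
    """Single-frequency DFT coefficient of one segment."""
    return sum(x * cmath.exp(-2j * math.pi * freq_hz * t / fs) for t, x in enumerate(segment))

def coherence(x, y, freq_hz, fs, seg_len):
    """Magnitude-squared coherence at one frequency over non-overlapping segments.

    C = |<X Y*>|^2 / (<|X|^2> <|Y|^2>), <.> = mean over segments; 0 <= C <= 1.
    (The 1/K averaging factors cancel, so plain sums are used.)
    """
    sxy, sxx, syy = 0j, 0.0, 0.0
    for start in range(0, len(x) - seg_len + 1, seg_len):
        cx = fourier_coefficient(x[start:start + seg_len], freq_hz, fs)
        cy = fourier_coefficient(y[start:start + seg_len], freq_hz, fs)
        sxy += cx * cy.conjugate()
        sxx += abs(cx) ** 2
        syy += abs(cy) ** 2
    return abs(sxy) ** 2 / (sxx * syy)

def make_channels(n_segments=20, fs=250, seed=0):
    """Two synthetic channels: shared 40 Hz rhythm, independent 10 Hz rhythms, noise."""
    rng = random.Random(seed)
    x, y = [], []
    for _ in range(n_segments):
        phase_x = rng.uniform(0, 2 * math.pi)  # alpha phases drawn independently
        phase_y = rng.uniform(0, 2 * math.pi)  # per channel and per 1 s segment
        for t in range(fs):
            gamma = 2 * math.pi * 40 * t / fs
            alpha = 2 * math.pi * 10 * t / fs
            x.append(math.sin(gamma) + math.sin(alpha + phase_x) + rng.gauss(0, 1))
            y.append(math.sin(gamma + 0.5) + math.sin(alpha + phase_y) + rng.gauss(0, 1))
    return x, y

x, y = make_channels()
for f in (10, 40):
    print(f"{f} Hz coherence: {coherence(x, y, f, fs=250, seg_len=250):.2f}")
```

A time-resolved map like the one in the talk would repeat this in sliding windows over many frequencies; here a single estimate per frequency is enough to show that coherence tracks a consistent phase relationship rather than raw power.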

The theme of the talk is mastery.

When you hear the word mastery, one thing that comes to mind is individuality.

Not everybody is a master of everything. Different people are good at different, specific things.

In the same way, some people perceive the illusion while others don't; we are all unique individuals. As far as mastery of the illusion is concerned, the people who perceive the illusion most often have the lowest alpha coherence at baseline. When that alpha coherence is easily broken by the stimulus, they readily perceive the illusion. We did this analysis in sensor space, that is, at the EEG sensors. We can then apply modeling tools that simulate the activity of individual neurons and of whole brain areas, and predict how this kind of evidence can emerge from the brain. We are exploring these ideas.

How can you create a full-scale brain model that you can then use to study synchronization and desynchronization phenomena? This is where what we call neural mass models come in: we can apply them to predict the dynamics going on in source space.
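As an illustration of what a neural mass model looks like, here is a sketch of the Wilson-Cowan model, one classic example in which a whole population is described by the mean activity of an excitatory (E) and an inhibitory (I) pool. The talk does not say which neural mass model the group uses, so treat this as a generic example; the coupling weights, gains, thresholds, and time constants are illustrative values, and time is in arbitrary units.

```python
import math

def sigmoid(x, gain, threshold):
    """Population response function: fraction of the pool that is active."""
    return 1.0 / (1.0 + math.exp(-gain * (x - threshold)))

def wilson_cowan(drive_e, steps=5000, dt=0.1, tau_e=10.0, tau_i=20.0,
                 w_ee=16.0, w_ei=12.0, w_ie=15.0, w_ii=3.0):
    """Euler integration of a two-population Wilson-Cowan model.

    drive_e is an external input to the excitatory pool.
    Returns the time courses of the excitatory and inhibitory activities.
    """
    e, i = 0.1, 0.05
    e_trace, i_trace = [], []
    for _ in range(steps):
        # E is excited by itself and inhibited by I; I is driven by E and inhibits itself.
        de = (-e + sigmoid(w_ee * e - w_ei * i + drive_e, 1.3, 4.0)) / tau_e
        di = (-i + sigmoid(w_ie * e - w_ii * i, 2.0, 3.7)) / tau_i
        e, i = e + dt * de, i + dt * di
        e_trace.append(e)
        i_trace.append(i)
    return e_trace, i_trace

low_e, _ = wilson_cowan(0.0)
high_e, _ = wilson_cowan(5.0)
print(f"mean E without drive: {sum(low_e) / len(low_e):.3f}")
print(f"mean E with drive:    {sum(high_e) / len(high_e):.3f}")
```

Whole-brain models typically place one such unit per brain region and couple the units through anatomical connectivity, which is what makes it possible to study synchronization and desynchronization between regions.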

Validation of these predictions comes from other tools, borrowed from engineering and physics, that estimate the sources from detectors such as the EEG sensors. This is a classic problem in electrodynamics: there are many more possible sources than there are detectors, and the number of detectors is finite.

So how do you solve this ill-posed problem of finding the sources? It turns out that you can make smart use of knowledge about the brain's folds, which gives you a good estimate of where the activity is at the source level. Source-level coherence then provides some validation of the model's predictions.
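To see why the problem is ill-posed and how it can still be solved, consider a regularized minimum-norm estimate, one standard approach that may differ from the one used in this work. A leadfield matrix L describes how each source projects to each sensor (in practice it is computed from a head model built from the individual's anatomy, which is where knowledge of the folds enters). With more sources than sensors, infinitely many source patterns fit the data, and the minimum-norm estimate x = L^T (L L^T + lambda I)^(-1) y picks the one with the smallest total energy. The 2-sensor, 3-source leadfield below is hypothetical.

```python
def solve(a, b):
    """Solve a x = b for a small dense system (Gaussian elimination, partial pivoting)."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def minimum_norm_estimate(leadfield, measurements, lam=1e-6):
    """Regularized minimum-norm source estimate: x = L^T (L L^T + lam I)^-1 y.

    leadfield: n_sensors x n_sources matrix L; measurements: n_sensors values y.
    """
    n_sensors = len(leadfield)
    gram = [[sum(a * b for a, b in zip(leadfield[i], leadfield[j])) + (lam if i == j else 0.0)
             for j in range(n_sensors)] for i in range(n_sensors)]
    w = solve(gram, measurements)
    n_sources = len(leadfield[0])
    return [sum(leadfield[i][k] * w[i] for i in range(n_sensors)) for k in range(n_sources)]

L = [[1.0, 0.5, 0.0],   # sensor 1 sees sources 1 and 2
     [0.0, 0.5, 1.0]]   # sensor 2 sees sources 2 and 3
y = [0.5, 0.5]          # what a single active source 2 would produce
print([round(v, 3) for v in minimum_norm_estimate(L, y)])
```

The estimate reproduces the measurements but spreads the activity over all three sources rather than recovering the single true source, which is exactly why extra constraints, such as anatomical ones, are needed to get a good estimate.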

It turns out that the auditory and visual cortices can be functionally connected either directly or via multisensory areas such as the pSTS. For rare perceivers, the direct auditory-to-visual connectivity is very strong, whereas for frequent perceivers it is overcome by auditory-to-multisensory and multisensory-to-visual connectivity, as these two plots show.

In summary, neural states are defined by states of synchronous oscillation in distributed brain regions. In a series of experiments on speech perception and visual attention, we have shown that decoherence of brain rhythms is critical when cognitive load increases or when integration demands rapidly become complex.

Future studies should aim to understand decoherence, or decorrelation, because synchronization and desynchronization may hold the key to formulating a new kind of grand unified theory of brain function.

For more such talks, attend Git Commit Show live. The next season is coming soon.
