The death of the two-week sprint

The AI-Native software development lifecycle demands a change

For decades, the 2-week sprint cycle was the "sweet spot" of the Software Development Lifecycle (SDLC) for many engineering teams. It was:

- Long enough for teams to make meaningful progress

- Frequent enough to make course corrections if needed

- Short enough to maintain focus and avoid significant deviations from the sprint goal

Today, it is not uncommon to see teams releasing almost every day. If you use Cursor, you have probably seen multiple updates a day, and if you use Claude Code, you have probably seen an update every 2-3 days.

So what has changed after AI, especially after the latest LLM releases that are extremely powerful for coding tasks?

Changes in Software Development Lifecycle (SDLC) after AI

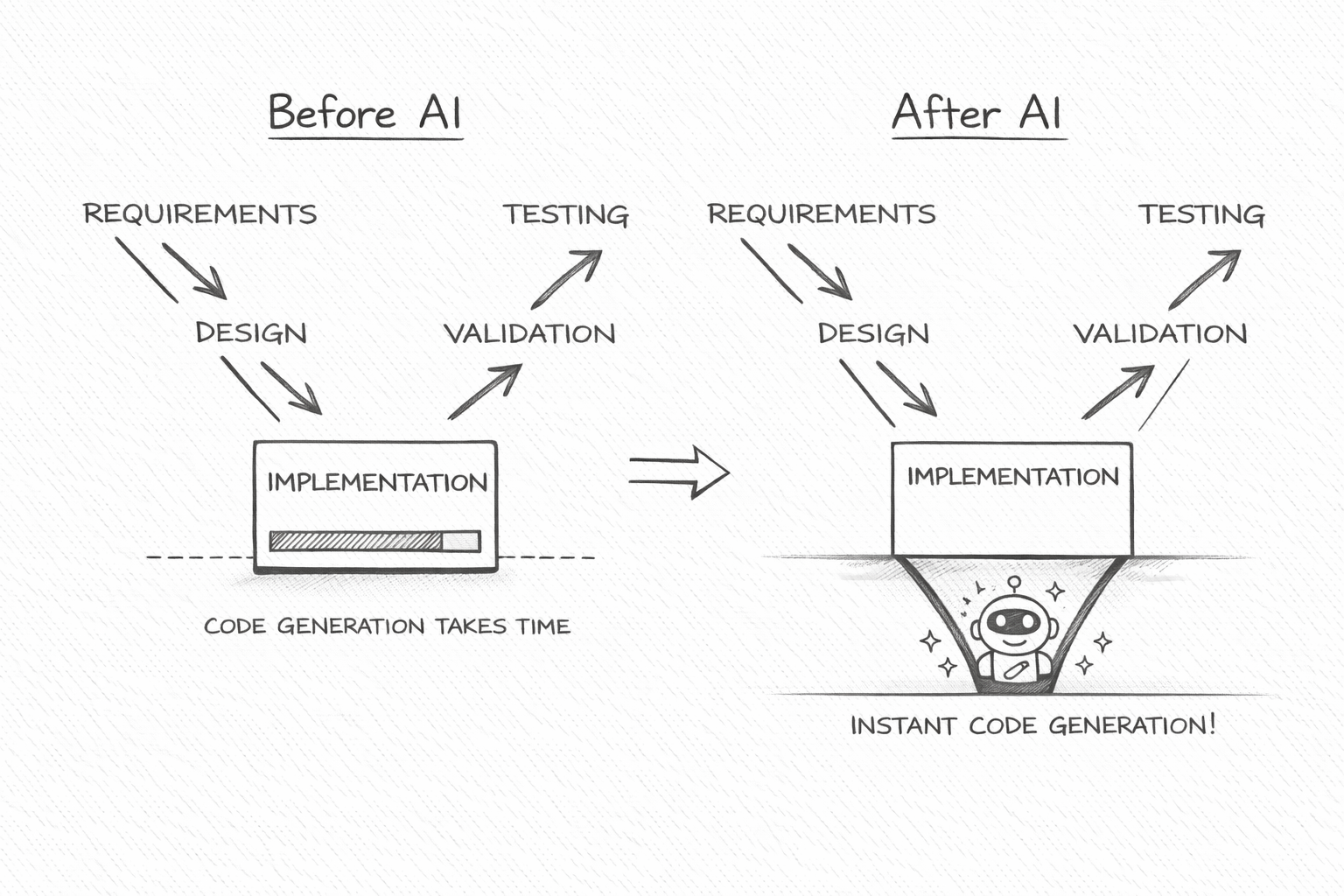

At a high-level, the software development process looks the same as before:

Design -> Implement -> Validate

But, is it really the same?

Implementation (coding) is almost instantaneous now

With AI, implementation (coding) time has dropped from weeks to days. Implementation used to consume most of the sprint. Now it takes the least time of the three steps (design, implementation, validation).

So as soon as you reach implementation (the bottom of the V shape), the project quickly "bounces" back to the validation phase.

Note: I will cover the V-Bounce SDLC model^[1] in more detail later in this article. This "bounce" is the key difference between the V-Bounce model and the older V-Model of the SDLC.

And there is much more nuance to notice than just the implementation time.

Natural language is the primary programming language

With AI, natural language has become the primary programming language. With reliable spec-to-code conversion, the primary asset to maintain has shifted from the codebase to the specification.

Humans are now primarily verifiers

With AI, humans have shifted from "creators" to "verifiers". AI implements, while humans focus on providing high-quality inputs (requirements, context, intent, architecture) and verifying the AI's outputs. The old bottleneck was implementation. The new bottleneck is clarity and verification.

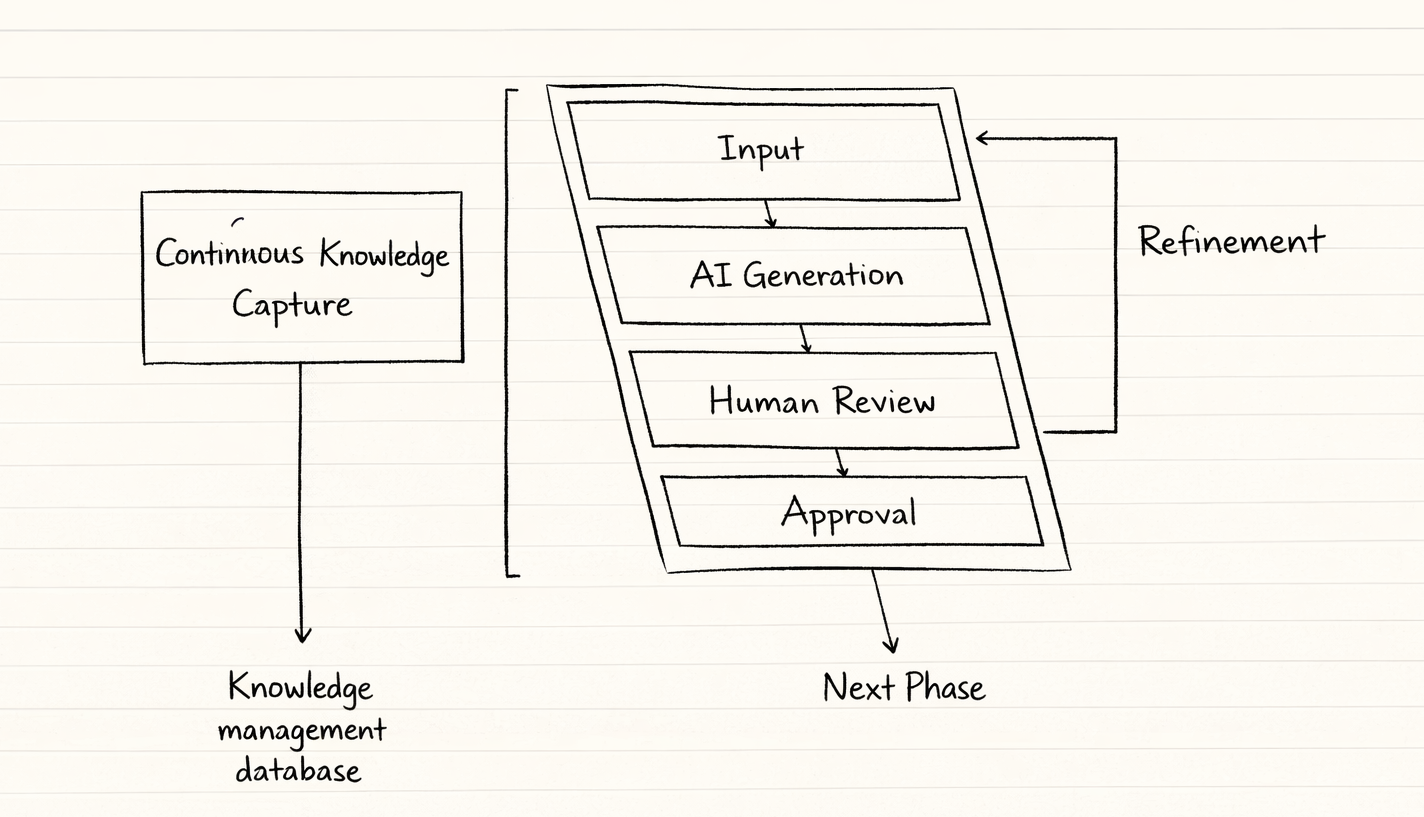

Most of us have either already adopted or will soon adopt this six-step AI-native software development workflow:

1. Input: Humans provide natural language requirements or artifacts from the previous phase to the agents.

2. AI Generation: Agents rapidly generate PRDs, designs, or executable code.

3. Human Review: The human acts as the critical verifier, interrogating the output for logic and intent.

4. Refinement: The AI quickly iterates on human feedback in a tight, recursive loop.

5. Approval: The human verifies whether the phase objectives are met.

6. Knowledge Capture: AI centralizes Knowledge Management (KM) by automatically capturing insights into a comprehensive knowledge graph, preventing future architectural drift.

These steps occur multiple times every day, making the two-week sprint obsolete. The V-Bounce model^[1] embraces this new reality and highlights the shift in mindset we need in our approach to the SDLC.

So we let go of the old two-week sprint.

What else needs to change? Let me narrow that down to lessons for engineering managers in a world where the AI-native SDLC is the new reality.

Lessons for engineering managers

What changes do engineering managers need to make to optimize the modern AI-native development lifecycle?

Here are my top lessons for engineering managers working with the AI-native software development lifecycle.

1. Requirements, architecture, and design are the critical path

Because AI writes the code, the quality of these inputs must be extremely high. This was equally important earlier, but the impact is now instantly visible.

Early on, I made the mistake of assuming clarity without verification. As an engineering manager, I greenlit a project where everyone nodded along to the goal, but I never forced alignment on what “done” actually meant.

Recovery came from stopping the project midstream and rewriting the spec together. Not cleaner, also sharper. One owner, one success metric. One explicit tradeoff we were willing to accept. Progress snapped back immediately.

-- Edward Tian, Founder & CEO, GPTZero

Why bother when vibe coding works?

Vibe coding is: Prompt → Hope → Debug

And yes — it’s fast. Frontier models often produce surprisingly good one-shot results. It feels like magic. Great for POCs. Great for exploration.

But stack a few implicit assumptions. Let a few silent divergences accumulate. Suddenly you’re debugging decisions you never consciously made.

You might think:

So this is just another reminder that PRDs (Product Requirements Documents) and HLDs (High-Level Designs) matter?

Not quite.

I asked many engineering managers about their experience leading AI-native engineering teams, and I found a consensus around what Kush shared:

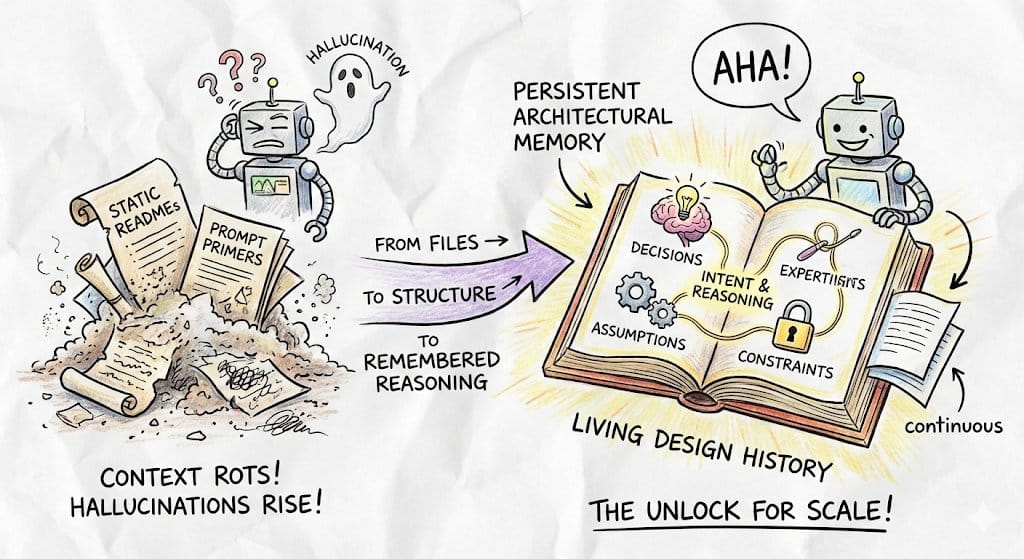

With AI, we were shipping faster than ever, until the progress stalled. READMEs decayed, context rotted, and every new feature required re-explaining the system from scratch. Restating intent began to cost more than generating code. It was not a tooling flaw, it was the absence of continuous architectural memory. We need systems that track decisions, assumptions, and constraints from Day 0 — not just files, but why the system looks the way it does.

-- Kush Mishra, Founder at Neander.ai (Prev. CTO at Noise and Partner at South Park Commons)

In the AI-native SDLC, requirements and architecture aren’t documentation overhead. They are the memory layer that prevents velocity from collapsing into entropy. Drafting the PRDs and HLDs once at the beginning does not finish the job.

We must continuously capture insights into a comprehensive knowledge graph to prevent future architectural drift.

Lesson for engineering managers: Write PRDs and HLDs before you start code generation. Leverage AI to automate continuous architectural memory.
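To make "architectural memory" more concrete, here is a minimal sketch of a decision log that records decisions together with their rationale, assumptions, and constraints, and renders them as context for an agent. The `DecisionRecord` fields, the `ADR-001` entry, and the helper names are hypothetical illustrations, not a format taken from any of the teams quoted here.

```python
from dataclasses import dataclass, field, asdict
from datetime import date


@dataclass
class DecisionRecord:
    """One entry of architectural memory: what was decided and why."""
    id: str
    decision: str
    rationale: str
    assumptions: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)
    decided_on: str = field(default_factory=lambda: date.today().isoformat())


def append_record(log: list[dict], record: DecisionRecord) -> None:
    """Append a record; reject duplicate ids so the history stays unambiguous."""
    if any(entry["id"] == record.id for entry in log):
        raise ValueError(f"duplicate decision id: {record.id}")
    log.append(asdict(record))


def context_for_agent(log: list[dict]) -> str:
    """Render the log as plain text that can be prepended to an agent prompt."""
    lines = []
    for entry in log:
        lines.append(f"[{entry['id']}] {entry['decision']} (why: {entry['rationale']})")
        for constraint in entry["constraints"]:
            lines.append(f"  constraint: {constraint}")
        for assumption in entry["assumptions"]:
            lines.append(f"  assumption: {assumption}")
    return "\n".join(lines)


# Hypothetical example entry.
log: list[dict] = []
append_record(log, DecisionRecord(
    id="ADR-001",
    decision="Use an event queue between ingestion and processing",
    rationale="Ingestion spikes must not block processing",
    assumptions=["Events can tolerate a few seconds of delay"],
    constraints=["No synchronous calls from ingestion to processing"],
    decided_on="2025-01-15",
))
print(context_for_agent(log))
```

In practice the log would live in version control next to the code, so each new agent session starts from the recorded "why" instead of a fresh explanation.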

2. The specification as the "single source of truth"

Version-control specifications alongside code. This creates a trail where every release is linked to specific spec versions and the tests that validated them. In API development, use the formal specification (such as OpenAPI) to generate contract tests. This helps ensure that the implementation does not drift from the agreed-upon contract, and it maintains traceability between the interface design and the backend code.
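As a rough illustration of a contract test, the sketch below checks a response payload against a small schema fragment written in the style of an OpenAPI response schema. The `USER_SCHEMA` fragment, the `fake_get_user` handler, and the hand-rolled checker are all hypothetical simplifications; a real setup would load the actual spec file and rely on a full schema validator.

```python
# Hypothetical fragment of an OpenAPI-style response schema for a "get user" endpoint.
USER_SCHEMA = {
    "type": "object",
    "required": ["id", "email"],
    "properties": {
        "id": {"type": "integer"},
        "email": {"type": "string"},
        "nickname": {"type": "string"},
    },
}

# Maps the schema type names used above to Python types.
PY_TYPES = {"integer": int, "string": str, "boolean": bool, "object": dict, "array": list}


def contract_violations(payload: dict, schema: dict) -> list[str]:
    """Return the ways `payload` breaks `schema`; an empty list means it conforms."""
    problems = []
    for name in schema.get("required", []):
        if name not in payload:
            problems.append(f"missing required field: {name}")
    for name, rule in schema.get("properties", {}).items():
        if name in payload:
            expected = PY_TYPES[rule["type"]]
            value = payload[name]
            # bool is a subclass of int in Python, so reject it explicitly for integers.
            wrong_bool = expected is int and isinstance(value, bool)
            if not isinstance(value, expected) or wrong_bool:
                problems.append(f"wrong type for {name}: expected {rule['type']}")
    return problems


def fake_get_user(user_id: int) -> dict:
    """Stand-in for the real backend handler under test."""
    return {"id": user_id, "email": "a@example.com"}


# The contract test: the handler's output must satisfy the agreed schema.
assert contract_violations(fake_get_user(7), USER_SCHEMA) == []

# A drifting implementation is caught with a readable list of violations.
print(contract_violations({"id": "7"}, USER_SCHEMA))
```

Because the test is derived from the schema rather than from the implementation, a change to either side without the other shows up as a failing check.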

At RudderStack, my engineering team didn’t initially trust AI for production development. But as we refined our AI-native SDLC and aligned our approach with the spec-driven-development, it became indispensable for both speed and quality.

1. Our process starts with a PRD. The engineer collaborates with AI to clarify it and produce an HLD. That context, including the HLD, is committed to Git for traceability.

2. Frontier LLM models are used to create an implementation plan. From that plan, the engineer creates Linear tickets. Implementation happens in short bursts using fast, cost-efficient models, alongside test generation and behavior verification.

3. We maintain Agent.md / Claude.md for global context and task-specific skill documents. Engineers are responsible for capturing new decisions and learnings into these files so the agent improves over time.

4. The engineer raises the PR and remains accountable for the shipped code. Code review still takes significant time. CI/CD helps, but human review is non-negotiable.

This process evolved through experimentation. As our skills and architectural memory compound, engineers are increasingly comfortable delegating implementation to AI agents. Next, we’re moving toward dev containers to ensure identical environments for both humans and agents.

-- Mitesh Sharma, Engineering Manager at RudderStack

AI still makes mistakes, especially when faced with too many constraints or detailed specifications. So won't the spec-driven approach backfire?

True, AI is prone to errors and can be overwhelmed by complexity. Being spec-driven does not mean overloading the context window. Structured decomposition is the key.

Specify (Start Small) → Plan (Decompose) → Generate → Verify (Human/Test) → Iterate.

As one engineering leader noted:

Once we started treating AI as a junior developer rather than a magic box, quality, speed and team confidence all improved.

-- Rehan Ahmed, CTO at Smart Web Agency

Treat the AI not as a magic box but as a "junior developer": give it clear, small tasks, review its work frequently, and assume it will make mistakes until proven otherwise. This fits naturally within spec-driven development.
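The Specify → Plan → Generate → Verify → Iterate loop can be sketched as a small driver that works through decomposed tasks one at a time and feeds verification failures back into the next attempt. The `generate` and `verify` callables are hypothetical stand-ins for a model call and a test run; the stubs below only simulate them.

```python
from typing import Callable, Optional


def build_incrementally(
    tasks: list[str],
    generate: Callable[[str, str], str],
    verify: Callable[[str, str], Optional[str]],
    max_attempts: int = 3,
) -> dict[str, str]:
    """Generate each small task, verify it, and feed failures back as feedback."""
    accepted: dict[str, str] = {}
    for task in tasks:
        feedback = ""
        for _ in range(max_attempts):
            candidate = generate(task, feedback)
            problem = verify(task, candidate)
            if problem is None:
                accepted[task] = candidate
                break
            feedback = problem  # the next attempt sees what went wrong
        else:
            # No attempt passed verification: stop and hand the task to a human.
            raise RuntimeError(f"escalate to a human: {task!r} failed {max_attempts} times")
    return accepted


def stub_generate(task: str, feedback: str) -> str:
    """Simulated model: forgets input validation until told about it."""
    return f"{task} + validation" if feedback else task


def stub_verify(task: str, candidate: str) -> Optional[str]:
    """Simulated test run: returns None on success, else a failure message."""
    return None if "validation" in candidate else "missing input validation"


result = build_incrementally(["parse config", "load users"], stub_generate, stub_verify)
print(result)
```

The important design choice is the bounded retry with escalation: the agent gets a few chances on a small task, and a human steps in as soon as it stops converging.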

3. AI test generation is not optional

When requirements are planned and put together as a spec, generate the corresponding tests at the same time so that the code can be validated immediately after implementation. Accelerating the validation phase improves productivity significantly.

This creates an immediate "traceability matrix," linking every requirement directly to a test case before a single line of code is written.
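A traceability matrix can be as simple as a mapping from requirement IDs to the tests that cover them. The sketch below builds one and reports requirements that have no test yet; the requirement IDs and test names are made up for illustration.

```python
# Hypothetical requirements taken from a spec.
REQUIREMENTS = {
    "REQ-1": "User can sign up with email",
    "REQ-2": "Duplicate emails are rejected",
    "REQ-3": "Password reset link expires after 1 hour",
}

# Each test declares which requirement(s) it validates.
TESTS = {
    "test_signup_success": ["REQ-1"],
    "test_signup_duplicate_email": ["REQ-1", "REQ-2"],
}


def traceability_matrix(requirements: dict[str, str],
                        tests: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map every requirement id to the list of tests that cover it."""
    matrix: dict[str, list[str]] = {req_id: [] for req_id in requirements}
    for test_name, covered in tests.items():
        for req_id in covered:
            if req_id not in matrix:
                raise KeyError(f"{test_name} references unknown requirement {req_id}")
            matrix[req_id].append(test_name)
    return matrix


matrix = traceability_matrix(REQUIREMENTS, TESTS)
uncovered = [req_id for req_id, names in matrix.items() if not names]
print(matrix)
print("uncovered:", uncovered)
```

Running a check like this in CI turns "every requirement has a test" from a convention into a gate.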

AI didn’t make us 10x faster, it made our hidden assumptions visible. Code is cheap now. Decision quality isn’t. The new bottleneck is problem framing, context preservation, and evaluation rigor. We had to redesign our SDLC around traceability and constraint clarity, otherwise AI just scaled confusion.

-- Ritesh Mathur, Co-founder at chetto.ai

We also need to ensure that when requirements change, the AI identifies exactly which tests need updates. Without effective use of AI in automated test generation, a human has to find and update the relevant tests manually every time, which is slow and error-prone.

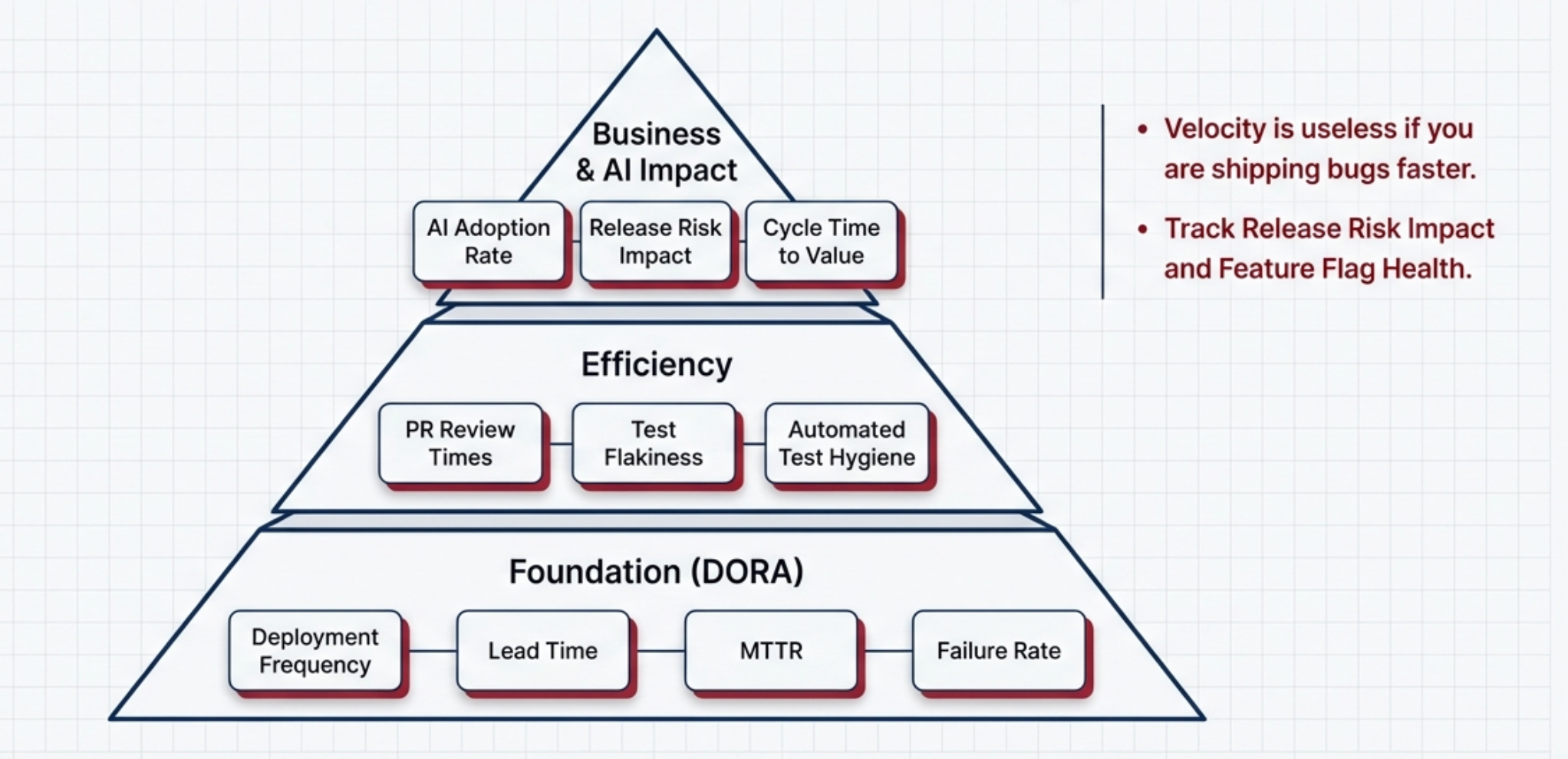

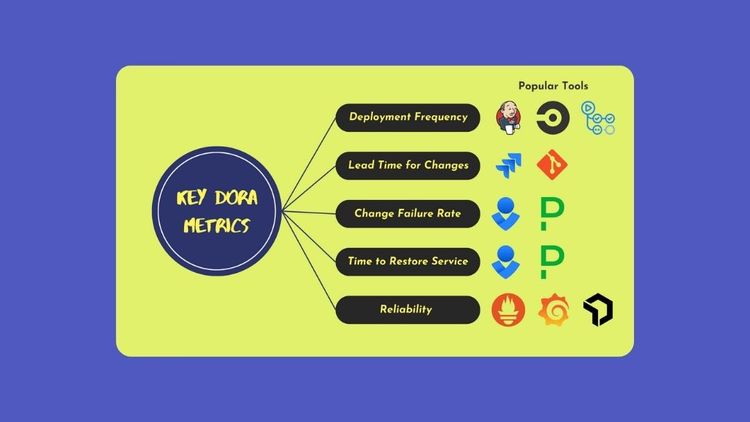

4. Rethink velocity and redefine engineering metrics

Now that we are shipping in days or hours and not weeks, we need to recalibrate how we measure engineering excellence.

Velocity is useless if you are shipping bugs faster.

Multiple daily deploys are no longer impressive. The standard is moving toward on-demand, near-instantaneous delivery, and the DORA benchmarks need to evolve accordingly.
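One way to keep speed and quality in the same view is to report deployment frequency next to change failure rate, two of the DORA metrics. The sketch below computes both from a list of deployments; the `Deployment` record and the sample data are invented for illustration, and how a team decides that a deployment "caused an incident" is left as an assumption.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Deployment:
    day: date
    caused_incident: bool  # assumption: the team tags failed changes itself


def deployment_frequency(deploys: list[Deployment], period_days: int) -> float:
    """Average number of deployments per day over the period."""
    return len(deploys) / period_days


def change_failure_rate(deploys: list[Deployment]) -> float:
    """Share of deployments that led to an incident (0.0 if there were none)."""
    if not deploys:
        return 0.0
    return sum(d.caused_incident for d in deploys) / len(deploys)


# Invented sample: 10 deployments over 5 days, 3 of which caused incidents.
deploys = [
    Deployment(date(2025, 3, 3 + i // 2), caused_incident=(i in (1, 4, 8)))
    for i in range(10)
]

print("deploys per day:", deployment_frequency(deploys, period_days=5))
print("change failure rate:", change_failure_rate(deploys))
```

Two deploys a day looks healthy on its own, but a 30% failure rate alongside it is exactly the "shipping bugs faster" pattern.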

5. The evolving role and performance of engineers

Seniority is not defined by the volume of manual code. It is about the ability to provide strong, informed opinions to guide AI outputs.

Senior engineers must be the "context makers" providing the strategic intent of the business.

As Mitesh reflects on his team's journey into AI-native software development:

At RudderStack, initially the team was skeptical of using AI in software engineering. We started seeing positive results last year in terms of code quality and engineer performance. Before AI, developers used to get exhausted while writing a lot of code. Now that code generation is fast, developers can focus on making it better. If they don’t like something, they can rewrite it faster. The developer is always accountable for the code they ship using AI. Developers are always in the loop and will always be there, but we see their role changing from writing code to guiding AI, designing guidelines, and building pipelines.

-- Mitesh Sharma, Engineering Manager at RudderStack

When AI is this capable, especially with the Claude Opus 4.6 and GPT 5.3 Codex models, will engineering managers still be relevant?

The Jevons paradox in economics states that as a resource becomes more efficient to use, its consumption often increases. A classic example is how coal consumption soared after the Watt steam engine greatly improved the efficiency of coal-fired steam engines. We are seeing a similar story here.

The reduced cost and effort of software development due to AI are leading to increased demand for software, rather than a reduction in overall development activity.

This increased demand will require not only new AI tooling but also talented engineering managers who can continue to innovate and structure the new software development lifecycle. The Invide job feed shows that engineering management roles are increasing, while developer roles are decreasing, and the nature of the roles has changed significantly.

Conclusion

When the execution phase of a feature effectively collapses from days to hours, the 14-day cycle becomes an artificial delay. In this article, I analyzed how AI is changing the software development lifecycle and how engineering managers can navigate and capitalize on these changes. It starts by letting go of old constraints like the two-week sprint.

The teams that redesign their lifecycle around architectural memory, traceability, and constraint clarity will compound speed. The rest will just ship confusion faster.

References

- Hymel, C. (2024). The AI-Native Software Development Lifecycle: A Theoretical and Practical New Methodology. Crowdbotics. arXiv. https://arxiv.org/abs/2408.03416

- Piskala, D. B. (2026). Spec-Driven Development: From Code to Contract in the Age of AI Coding Assistants. arXiv. https://arxiv.org/abs/2510.03920

- Bonin, A. L., Smolinski, P. R., & Winiarski, J. (2025). Exploring the Impact of Generative Artificial Intelligence on Software Development in the IT Sector: Preliminary Findings on Productivity, Efficiency and Job Security. University of Gdańsk. arXiv. https://arxiv.org/abs/2508.16811

- Kalluri, R. (2025). Why Does the Engineering Manager Still Exist in Agile Software Development? International Journal of Software Engineering & Applications (IJSEA), 16(4/5), 17–28. https://doi.org/10.5121/ijsea.2025.16502

- Sany, M. R. H., Ahmed, M., Milon, R. H., Labony, R. J., & Rabbi, R. H. (2024). Engineering Ethics and Management Decision-Making. International Journal of Innovative Science and Research Technology (IJISRT), 9(5), 3435–3445. https://doi.org/10.38124/ijisrt/IJISRT24MAY1683

- Reddit. (2025). Does anyone use spec-driven development? r/ChatGPTCoding. https://www.reddit.com/r/ChatGPTCoding/comments/1otf3xc/does_anyone_use_specdriven_development/
