Don't Touch Your Face - App to fight COVID-19

This is the written version of the showcase given by Maya at the Git Commit Show 2020. In this talk, she shows how she used transfer learning to build an app that uses computer vision to alert you when you touch your face.

About the speaker

Maya is from Israel. She is a 16-year-old student at Tel Aviv University. At such a young age, she has built a genuinely useful tool that is highly relevant during the COVID-19 pandemic, using computer vision to help us avoid touching our faces.

What is "Don't touch your face"?

"Don't touch your face" is a website that detects when you touch your face. The website loads a model trained on images of the user's face, and whenever the user touches their face, it detects this and beeps. The interesting thing is that everything, including training the model, happens in the browser.

How does it work?

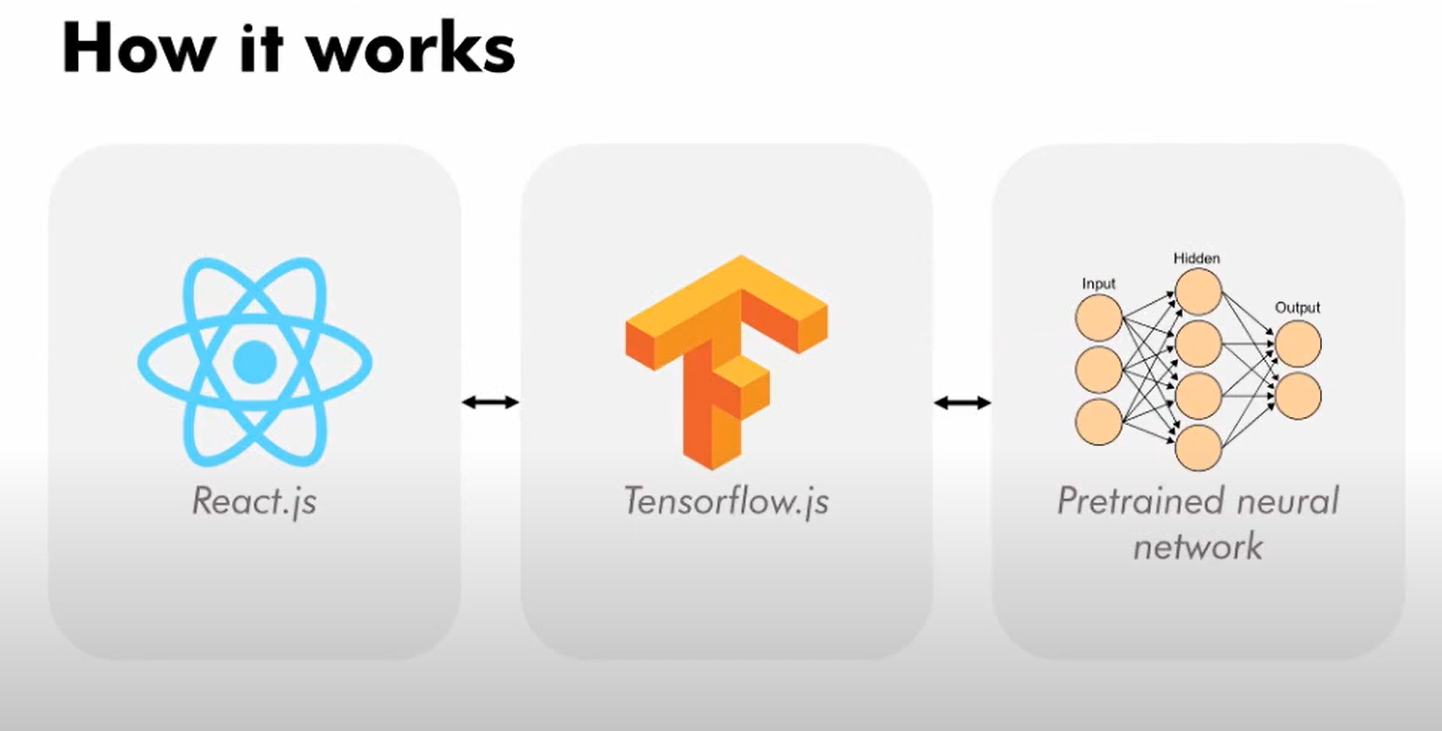

"Don't touch your face" runs entirely on the front end, using TensorFlow.js and a pre-trained neural network. The most interesting thing is that it all happens on the device: there is no server receiving or processing images of faces. It runs in the browser on your own computer, which is what makes it so powerful.

The main technology powering this is a machine learning technique known as "transfer learning".

Transfer learning is a research problem in machine learning that focuses on storing knowledge gained while solving one problem and applying it to a different but related problem.

So, maybe we already have a solution to one machine learning problem and want to apply it to something in the same domain that is a bit different, or perhaps more specific.

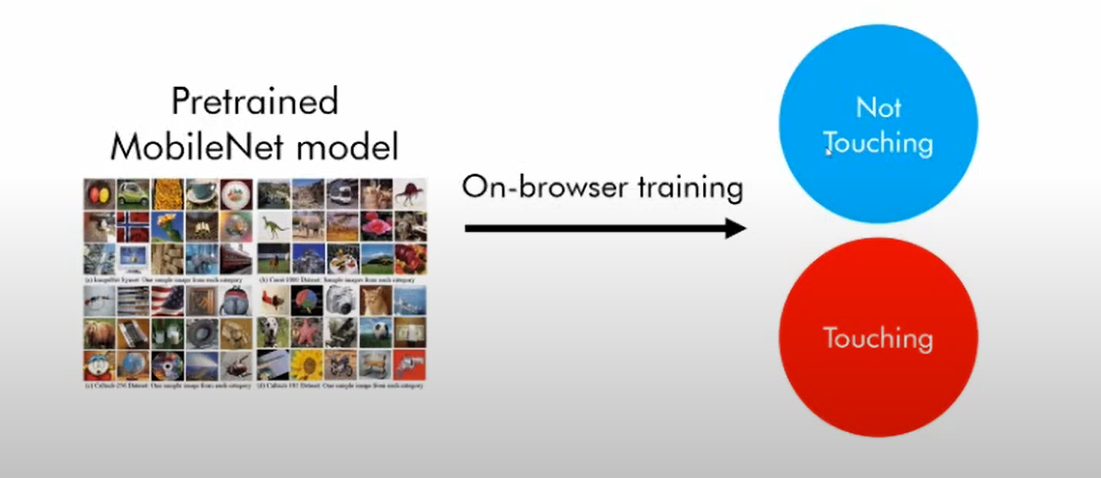

We take a general machine learning model and make it more specific, which may seem counterintuitive. There are two main parts. The first is a pre-trained MobileNet, a deep convolutional neural network of roughly 30 layers, trained on ImageNet, a dataset of about 14 million images.

It takes an image of anything, such as a cat or a ball, and outputs a set of categories describing what that thing is. The second part takes this output for images of your face and feeds it into a K-nearest neighbors (KNN) model, trained in the browser, which outputs either "touching" or "not touching".

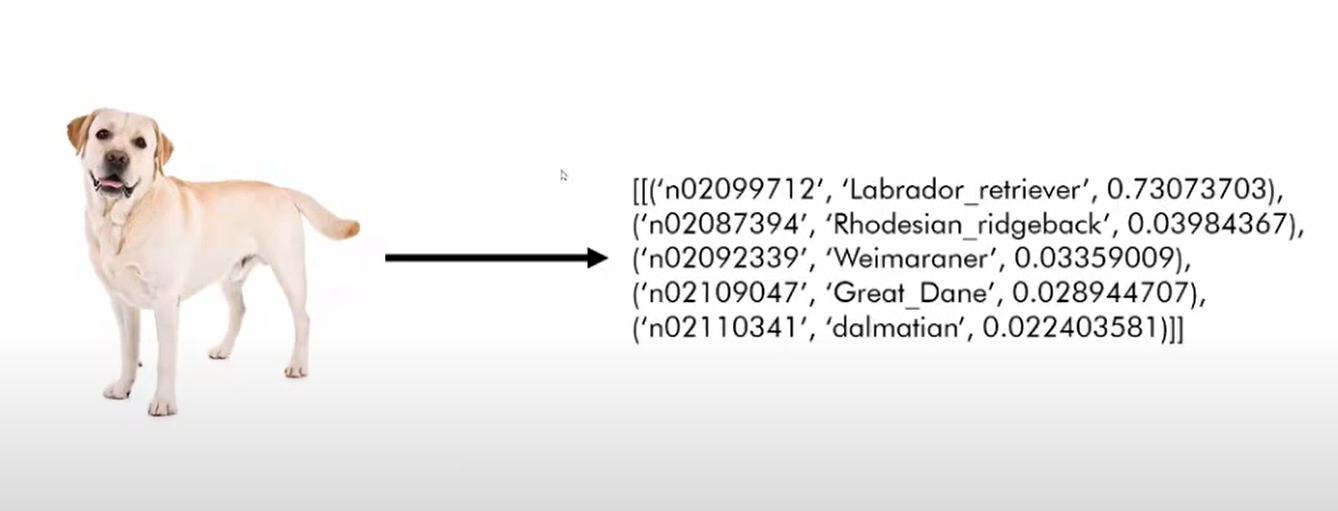

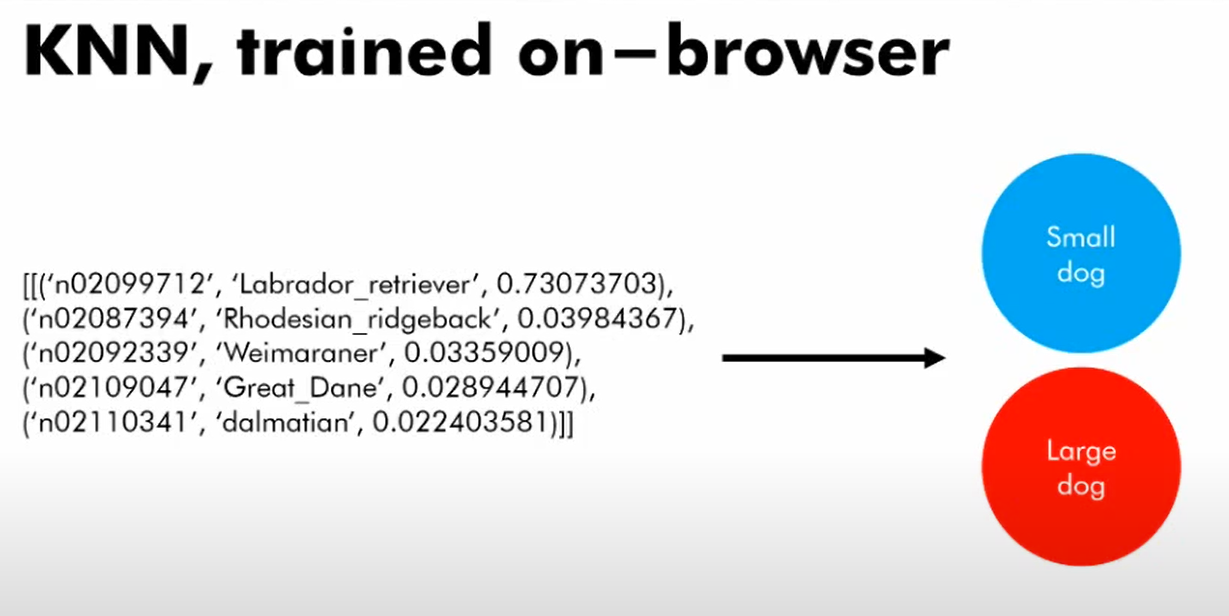

For example, suppose we want to take an image of a dog and output whether it is a large dog or a small dog. If we feed the image into MobileNet, it outputs a set of categories with probabilities instead.

So maybe there is a 73% chance that it is a Labrador Retriever, a 4% chance that it is another breed, and so on. The output is a large set of categories, and on its own it doesn't tell us much about whether the dog is large or small.
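To make that concrete, here is a minimal Python sketch of how a classifier's raw scores turn into the kind of percentage list described above, using the softmax function. The category names and scores are made up for illustration; they are not MobileNet's real labels or outputs.

```python
import math

def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_k(labels, probs, k=3):
    """Return the k most likely (label, probability) pairs, highest first."""
    pairs = sorted(zip(labels, probs), key=lambda p: p[1], reverse=True)
    return pairs[:k]

# Hypothetical categories and raw scores, for illustration only.
labels = ["labrador retriever", "golden retriever", "beagle", "tennis ball"]
logits = [4.0, 2.5, 1.0, -1.0]

probs = softmax(logits)
for label, p in top_k(labels, probs):
    print(f"{label}: {p:.0%}")
```

None of these categories answers the "large or small?" question directly, which is why a second, smaller model is trained on top of this output.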

So instead, we gather a dataset. One of the most powerful things about transfer learning is that it doesn't require much data, because most of the learning has already been done by the pre-trained model. We collect examples of just large and small dogs and feed MobileNet's output for each one into a smaller model that outputs what we want.

In our app, that smaller model is a K-nearest neighbors (KNN) model, which is about the simplest machine learning model you can imagine. It stores all of the examples it has seen so far. Given a new input, it finds the K nearest stored examples and takes a majority vote: if most of the nearest examples were large dogs, it says, "Yes, it's a large dog."
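The majority-vote idea can be sketched in a few lines of Python. This is a from-scratch illustration, not the library the app uses; it assumes Euclidean distance between feature vectors (other similarity measures are common too), and the 2-D vectors below are hypothetical stand-ins for MobileNet outputs.

```python
from collections import Counter
import math

class KNNClassifier:
    """Minimal k-nearest neighbors: store examples, then vote at query time."""

    def __init__(self, k=3):
        self.k = k
        self.examples = []  # list of (feature_vector, label) pairs

    def add_example(self, features, label):
        # "Training" a KNN model is just remembering the labeled example.
        self.examples.append((list(features), label))

    def predict(self, features):
        if not self.examples:
            raise ValueError("no examples added yet")
        # Sort stored examples by Euclidean distance to the query; keep the k nearest.
        nearest = sorted(self.examples, key=lambda ex: math.dist(ex[0], features))[: self.k]
        # Majority vote among the labels of those k nearest examples.
        votes = Counter(label for _, label in nearest)
        return votes.most_common(1)[0][0]

knn = KNNClassifier(k=3)
# Hypothetical 2-D feature vectors standing in for MobileNet outputs.
knn.add_example([0.9, 0.1], "large dog")
knn.add_example([0.8, 0.2], "large dog")
knn.add_example([0.85, 0.15], "large dog")
knn.add_example([0.1, 0.9], "small dog")
knn.add_example([0.2, 0.8], "small dog")
print(knn.predict([0.7, 0.3]))
```

Because there is no training step beyond storing examples, adding a new labeled example takes effect immediately, which suits collecting data live in a browser.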

So, in short, we train a KNN model. It takes in MobileNet's output (features of a dog in our example, or features of your face in the app) and outputs "small dog" or "large dog", or, in the app, "touching" or "not touching".

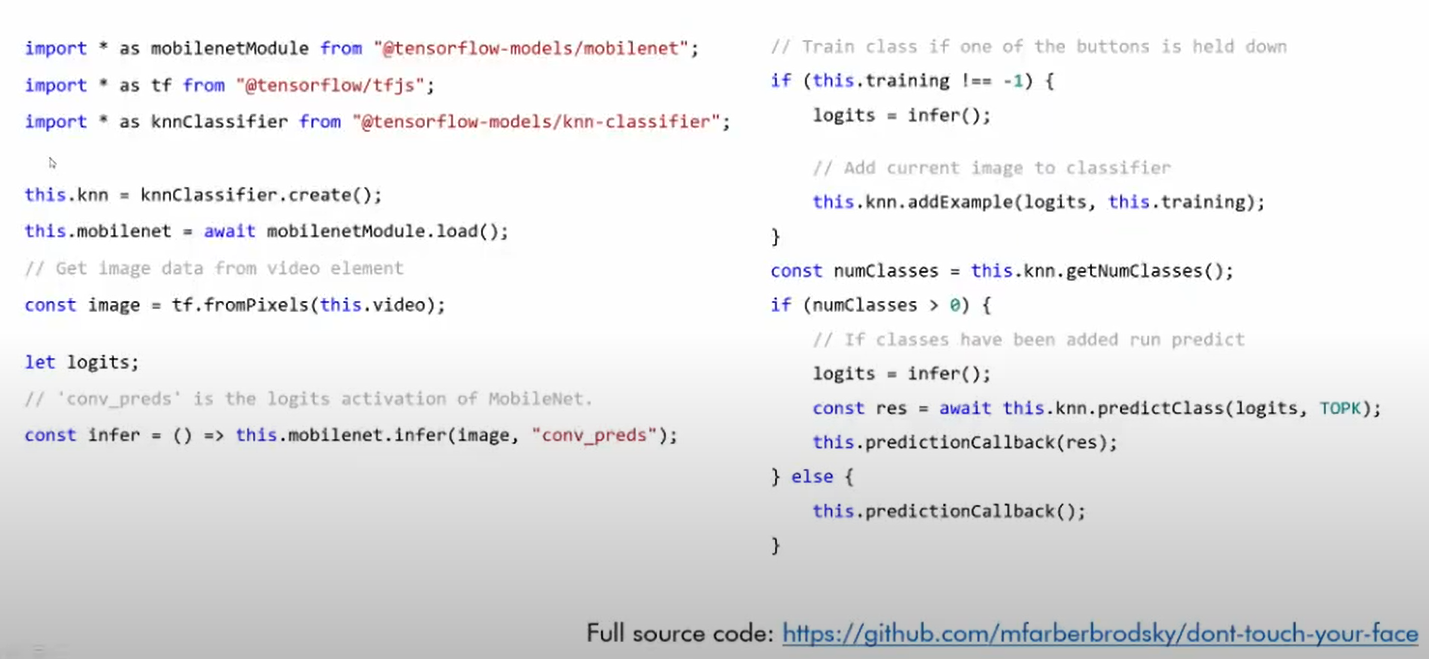

It's all done using TensorFlow.js, and the image above shows the code.

We import MobileNet, the pre-trained network, and load it. Each time we get an image, we take it from the video element and run MobileNet on it to infer its output. If we're training, we add that output as an example to the KNN model. If we're predicting, we first run MobileNet on the frame and then run the KNN classifier over its output.

The KNN classifier's result is the prediction.
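The per-frame loop described above can be sketched in Python as follows. This is not the app's actual TensorFlow.js code. `extract_features`, `FROZEN_WEIGHTS`, `FaceTouchDetector`, and the 4-pixel "frames" are all hypothetical: a fixed linear layer with ReLU stands in for MobileNet, and a small KNN vote stands in for the browser's KNN classifier.

```python
from collections import Counter
import math

# Hypothetical frozen "pre-trained" weights. They are never updated below,
# mirroring how the pre-trained network stays fixed during transfer learning.
FROZEN_WEIGHTS = [
    [0.5, -0.2, 0.1, 0.3],
    [0.1, 0.4, -0.3, 0.2],
]

def extract_features(frame):
    """Stand-in for MobileNet: one fixed linear layer followed by ReLU.

    `frame` is a hypothetical list of 4 pixel intensities in [0, 1].
    """
    return [max(0.0, sum(w * x for w, x in zip(row, frame))) for row in FROZEN_WEIGHTS]

class FaceTouchDetector:
    """Hypothetical sketch of the per-frame loop: frozen features, then KNN."""

    def __init__(self, k=3):
        self.k = k
        self.examples = []  # (feature_vector, label) pairs collected while training

    def process_frame(self, frame, training=False, label=None):
        # Step 1: always run the frozen feature extractor on the frame.
        features = extract_features(frame)
        if training:
            # Step 2a (training): store the features as a labeled KNN example.
            self.examples.append((features, label))
            return None
        # Step 2b (predicting): classify the features with KNN.
        return self._knn_predict(features)

    def _knn_predict(self, features):
        if not self.examples:
            raise ValueError("add training examples first")
        nearest = sorted(self.examples, key=lambda ex: math.dist(ex[0], features))[: self.k]
        return Counter(label for _, label in nearest).most_common(1)[0][0]

detector = FaceTouchDetector(k=3)
# Training: label a few frames while the user demonstrates each state.
for frame in ([1.0, 0.0, 0.0, 1.0], [0.9, 0.1, 0.0, 0.8], [0.8, 0.0, 0.1, 0.9]):
    detector.process_frame(frame, training=True, label="touching")
for frame in ([0.0, 1.0, 1.0, 0.0], [0.1, 0.9, 0.8, 0.0], [0.0, 0.8, 0.9, 0.1]):
    detector.process_frame(frame, training=True, label="not touching")

# Predicting: classify a new frame and "beep" if it looks like a face touch.
if detector.process_frame([0.95, 0.05, 0.05, 0.9]) == "touching":
    print("beep")
```

The key design point is that only the small KNN part learns anything; the feature extractor is reused unchanged, which is why a handful of labeled frames can be enough.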

You can learn more on Google's Teachable Machine, a fantastic website that lets you train models right in the browser.

For more such talks, attend Git Commit Show live. The next season is coming soon.
